Theory vs. Practice

Diagnosis is not the end, but the beginning of practice.

Scala - What makes something "strategic"?

You are used to seeing G-WAN say that scalability matters on multicore CPUs. Let's hear what the European Union has to say:

"The Popular Parallel Programming challenge is the single most important problem facing the global IT industry. Without significant progress, there is a considerable risk that the underlying business model, in which hardware performance developments enable improved software which drives purchases of new hardware, will be broken. Industry giants, including Microsoft and Intel, are well aware of this, and devote considerable resources to funding research in the area.

The work proposed here describes an ambitious but coherent attempt to address the issues and consequently, if successful, would be of enormous value, both intellectually and industrially."

Putting (taxpayer) money where its mouth was, the E.U. granted 2.3 million Euros to this project aimed at tackling the "Popular Parallel Programming" challenge – despite the "considerable resources already devoted by Microsoft and Intel" in this matter.

Did the so-generous taxpayer receive something valuable in return?

The strategic Project

This is Scala, a programming language designed as a "better Java", built on top of the Java virtual machine (JVM). Scala was created by Martin Odersky (a professor at the EPFL in Switzerland):

"The Scala research group at EPFL has won a 5 year European Research Grant of over 2.3 million Euros to tackle the "Popular Parallel Programming" challenge. This means that the Scala team will nearly double in size to pursue a truly promising way for industry to harness the parallel processing power of the ever increasing number of cores available on each chip."

The Swiss Government as well as prominent private investors joined forces to fund Scala:

Scala's design and implementation was partially supported by grants from:

- the Swiss National Fund,

- the Swiss National Competence Center for Research MICS,

- the European Framework 6 project PalCom,

- Microsoft Research,

- the Hasler Foundation.

Very few programming languages have put such an emphasis on "scalability" as Scala did (its name is an abbreviation of 'scalability'). Citing "An Overview of the Scala Programming Language", Second Edition, written by the authors of Scala:

"To validate our hypotheses, Scala needs to be applied in the design of components and component systems. Only serious application by a user community can tell whether the concepts embodied in the language really help in the design of component software."

As Scala appeared in 2003 (almost 10 years ago) and was substantially funded, we can be confident that it has completed the task. And an application server sounds like a "serious application" to validate the return on investment for the taxpayer.

I have read that the most mature of all Scala frameworks is Lift (first released in 2007) which, understandably, exposes its marvels:

"Lift is the most powerful, most secure web framework available today. There are Seven Things that distinguish Lift from other web frameworks.

Lift applications are:

- Secure: Lift apps are resistant to common vulnerabilities including many of the OWASP Top 10

- Developer centric: Lift apps are fast to build, concise and easy to maintain

- Designer friendly: Lift apps can be developed in a totally designer friendly way

- Scalable: Lift apps are high performance and scale in the real world to handle insane traffic levels

- Modular: Lift apps can benefit from, easy to integrate, pre built modules

- Interactive like a desktop app: Lift's Comet support is unparalled and Lift's ajax support is super-easy and very secure"

I thought G-WAN was fast, but I never imagined that someone could make a server able to "handle insane traffic levels".

Respected corporate users like eBay endorsed these claims:

"Lift is the only new framework in the last four years to offer fresh and innovative approaches to web development. It's not just some incremental improvements over the status quo, it redefines the state of the art. If you are a web developer, you should learn Lift. Even if you don't wind up using it everyday, it will change the way you approach web applications." - Michael Galpin, Developer, eBay

And the founder of Lift has an impeccable pedigree:

"David Pollak has been writing commercial software since 1977. He wrote the first real-time spreadsheet and the worlds highest performance spreadsheet engine. Since 1996, David has been using and devising web development tools. As CTO of CMP Media, David oversaw the first large-scale deployment of WebLogic. David was CTO and VPE at Cenzic, a web application security company. David has also developed numerous commercial projects in Ruby on Rails. In 2007, David founded the Lift Framework open source project."

So it was fully prepared to be humbled by Scala that I downloaded and tested Lift (whose logo is a rocket, by the way).

Testing the Scala "Lift" framework

After vainly searching for the /static folder where the Lift welcome page invited me to put the usual 100-byte test file, I discovered that it was located in... the source code tree, under /lift/scala_29/lift_basic/src/main/webapp/static. I silently agreed that "insane traffic levels" could come at the price of such a minor inconvenience and a few documentation gaps.

Then I warmed up the JVM by loading the page in a Web browser and started the usual ab.c test, with the weighttp client running 1 million HTTP requests 10 times per concurrency step, over the [1 - 1,000] concurrency range.

After 10 minutes, the ab.c output was still blank:

===============================================================================

G-WAN ab.c ApacheBench wrapper, http://gwan.ch/source/ab.c

-------------------------------------------------------------------------------

Machine: 1 x 6-Core CPU(s) Intel(R) Xeon(R) CPU W3680 @ 3.33GHz

RAM: 5.08/7.79 (Free/Total, in GB)

Linux x86_64 v#51-Ubuntu SMP Wed Sep 26 21:33:09 UTC 2012 3.2.0-32-generic

Ubuntu 12.04.1 LTS \n \l

> Collecting CPU/RAM stats for server 'java': 1 process(es)

pid[0]:4538 RAM: 539.93 MB

weighttp -n 1000000 -c [0-1000 step:10] -t 6 -k "http://127.0.0.1:8080/static/100.html"

_
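For readers who want to picture the size of that test plan, here is a minimal sketch of the sweep described above. It only builds the weighttp command lines and never runs them. The `-n`, `-c`, `-t` and `-k` flags are taken from the banner; the helper names are hypothetical, and capping the thread count at the number of clients is my assumption about how the single-client step has to be handled, not something taken from ab.c.

```python
# Sketch only: the commands are built and printed, never executed.
URL = "http://127.0.0.1:8080/static/100.html"
REQUESTS = 1_000_000  # requests per weighttp run (-n)
ROUNDS = 10           # runs per concurrency step
MAX_THREADS = 6       # client threads (-t), as in the banner above


def concurrency_steps(start=0, stop=1000, step=10):
    """Return 1, 10, 20, ..., 1000: a step of 0 clients is replaced by 1."""
    return [max(c, 1) for c in range(start, stop + 1, step)]


def weighttp_command(concurrency):
    """Build the argument list for one keep-alive run at this concurrency."""
    # Assumption: the thread count is capped at the number of clients.
    threads = min(MAX_THREADS, concurrency)
    return ["weighttp", "-n", str(REQUESTS), "-c", str(concurrency),
            "-t", str(threads), "-k", URL]


plan = [weighttp_command(c) for c in concurrency_steps() for _ in range(ROUNDS)]
print(len(plan), "runs,", len(plan) * REQUESTS, "requests in total")
print(" ".join(plan[0]))
print(" ".join(plan[-1]))
```

With these parameters the full sweep amounts to 101 concurrency steps of 10 runs each, which is why a server that cannot finish the very first step is a problem.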

So I had a look at the Lift server:

./sbt

[info] Loading project definition from /opt/lift/scala_29/lift_basic/project

[info] Set current project to Lift 2.5 starter template (in build file:/opt/lift/scala_29/lift_basic/)

>

> container:start

[info] jetty-8.1.7.v20120910

[info] NO JSP Support for /, did not find org.apache.jasper.servlet.JspServlet

[info] started o.e.j.w.WebAppContext{/,[file:/opt/lift/scala_29/lift_basic/src/main/webapp/]}

[info] started o.e.j.w.WebAppContext{/,[file:/opt/lift/scala_29/lift_basic/src/main/webapp/]}

[info] Started SelectChannelConnector@0.0.0.0:8080

[success] Total time: 17 s, completed Oct 8, 2012 9:48:44 AM

> Exception in thread "pool-9-thread-4" java.lang.OutOfMemoryError: GC overhead limit exceeded

...

Lift crashed a dozen times in different threads, as in the trace above, and it did so while using 6 GB of RAM, before even finishing the first "concurrency" step of the [1 - 1,000] range (using one single thread).

I tried again, without HTTP keep-alives, and got a similar result.

I tried again, with 10,000 requests (instead of 1 million), using the slower and single-threaded ApacheBench (instead of the fast multi-threaded Weighttp), and got the following results for 1/10/100/1000 clients:

ab -c 1 -n 10000 -k "http://127.0.0.1:8080/static/100.html"

Concurrency Level:      1
Time taken for tests:   27.559 seconds
Complete requests:      10000
Failed requests:        0
Write errors:           0
Keep-Alive requests:    10000
Total transferred:      6969418 bytes
HTML transferred:       3430000 bytes
Requests per second:    362.86 [#/sec] (mean)
Time per request:       2.756 [ms] (mean)
Time per request:       2.756 [ms] (mean, across all concurrent requests)
Transfer rate:          246.96 [Kbytes/sec] received

Connection Times (ms)
              min  mean[+/-sd] median   max
Connect:        0    0   0.0      0       0
Processing:     1    3  11.8      2     848
Waiting:        1    3  11.8      2     847
Total:          1    3  11.8      2     848

Percentage of the requests served within a certain time (ms)
  50%      2
  66%      3
  75%      3
  80%      3
  90%      4
  95%      5
  98%      6
  99%      7
 100%    848 (longest request)

---------------------------------------------------------------------------

ab -c 10 -n 10000 -k "http://127.0.0.1:8080/static/100.html"

Concurrency Level:      10
Time taken for tests:   5.557 seconds
Complete requests:      10000
Failed requests:        0
Write errors:           0
Keep-Alive requests:    10000
Total transferred:      6969258 bytes
HTML transferred:       3430000 bytes
Requests per second:    1799.68 [#/sec] (mean)
Time per request:       5.557 [ms] (mean)
Time per request:       0.556 [ms] (mean, across all concurrent requests)
Transfer rate:          1224.85 [Kbytes/sec] received

Connection Times (ms)
              min  mean[+/-sd] median   max
Connect:        0    0   0.0      0       1
Processing:     1    6  45.7      2    1027
Waiting:        1    6  45.7      2    1027
Total:          1    6  45.7      2    1027

Percentage of the requests served within a certain time (ms)
  50%      2
  66%      3
  75%      3
  80%      3
  90%      5
  95%      8
  98%     20
  99%     30
 100%   1027 (longest request)

---------------------------------------------------------------------------

ab -c 100 -n 10000 -k "http://127.0.0.1:8080/static/100.html"

Concurrency Level:      100
Time taken for tests:   69.004 seconds
Complete requests:      10000
Failed requests:        0
Write errors:           0
Keep-Alive requests:    10000
Total transferred:      6969469 bytes
HTML transferred:       3430000 bytes
Requests per second:    144.92 [#/sec] (mean)
Time per request:       690.043 [ms] (mean)
Time per request:       6.900 [ms] (mean, across all concurrent requests)
Transfer rate:          98.63 [Kbytes/sec] received

Connection Times (ms)
              min  mean[+/-sd] median   max
Connect:        0    3  52.8      0    1000
Processing:     1  671 1175.2     38    7848
Waiting:        1  671 1175.2     38    7848
Total:          1  674 1179.8     38    7848

Percentage of the requests served within a certain time (ms)
  50%     38
  66%     53
  75%   1558
  80%   1757
  90%   2536
  95%   3557
  98%   4108
  99%   4213
 100%   7848 (longest request)

---------------------------------------------------------------------------

ab -c 1000 -n 10000 -k "http://127.0.0.1:8080/static/100.html"

Benchmarking 127.0.0.1 (be patient)
apr_socket_recv: Connection timed out (110)
Total of 942 requests completed
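The headline figures in these ApacheBench reports can be cross-checked from two raw numbers: the request count and the total test time. The sketch below recomputes them from the values printed above; the function names are mine, and small differences are expected because ab prints the test time rounded to the millisecond.

```python
# (concurrency, completed requests, "Time taken for tests" in s, reported req/s)
RUNS = [
    (1,   10_000, 27.559,  362.86),
    (10,  10_000,  5.557, 1799.68),
    (100, 10_000, 69.004,  144.92),
]


def requests_per_second(requests, seconds):
    """Mean throughput over the whole test."""
    return requests / seconds


def time_per_request_ms(concurrency, requests, seconds):
    """Mean time one client waits for one request, in milliseconds."""
    return concurrency * seconds / requests * 1000.0


for concurrency, requests, seconds, reported in RUNS:
    rps = requests_per_second(requests, seconds)
    wait = time_per_request_ms(concurrency, requests, seconds)
    print(f"c={concurrency:<4} {rps:8.2f} req/s (ab reported {reported}), "
          f"{wait:8.3f} ms per request")
```

Read this way, going from 10 to 100 clients divides the throughput by more than 12 while the mean wait per request climbs from a few milliseconds to roughly 690 ms.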

The latency was a disaster, performance was incredibly low (G-WAN flies at 850,000 requests per second on the same 6-Core machine) and scalability was simply nonexistent, with Lift dying at concurrency 1,000.

Wondering if Lift was designed to work without HTTP keep-alives, I tried again, but had no more luck:

                         Requests/sec

              -------------------------------------------

Concurrency   with HTTP keep-alives   without HTTP keep-alives

-----------   ---------------------   ------------------------

1             362.86                  292.31

10            1799.68                 1084.20

100           144.92                  21.34

1,000         time-out                15.73

At this stage, I was almost certain that Lift performed so poorly because I was doing something wrong, so I looked for assistance.

I sent an email to the address listed on their web site: s.a.l.e.s.@liftweb.com (without the dots) but Google Groups rejected my message, classifying it as "bulk email" (this had never happened before and I have certainly never sent "bulk email" to anyone).

Then, Alex (from EON Inc) told me that he knows David. Having obtained David's email address, I asked him how to run Lift for a benchmark. He replied that I should run Lift in "production mode", citing two links (1, 2) and offering consulting services to further discuss this subject if that was needed.

Some Googling later, I found that running ./sbt -Drun.mode=production would do the job. Then, I restarted ab.c as well as Lift ...which seemed to be willing to run even slower than before. Inspecting the log I saw:

Service request (GET) /static/100.html returned 200, took 16280 Milliseconds

Service request (GET) /static/100.html returned 200, took 26378 Milliseconds

Service request (GET) /static/100.html returned 200, took 23115 Milliseconds

Service request (GET) /static/100.html returned 200, took 10098 Milliseconds

Service request (GET) /static/100.html returned 200, took 25690 Milliseconds

...

Wow. Between 10 and 26 seconds to serve a 100-byte static file?
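To back that range with numbers rather than eyeballing, here is a minimal sketch that extracts the service times from the five log lines quoted above (the elided lines are not included). The regular expression is my own guess at a pattern that fits these lines, not an official description of Lift's log format.

```python
import re
from statistics import mean

# The five log lines shown above, copied verbatim.
LOG = """\
Service request (GET) /static/100.html returned 200, took 16280 Milliseconds
Service request (GET) /static/100.html returned 200, took 26378 Milliseconds
Service request (GET) /static/100.html returned 200, took 23115 Milliseconds
Service request (GET) /static/100.html returned 200, took 10098 Milliseconds
Service request (GET) /static/100.html returned 200, took 25690 Milliseconds
"""

# Assumed pattern: HTTP status code, then the service time in milliseconds.
PATTERN = re.compile(r"returned (\d{3}), took (\d+) Milliseconds")


def service_times_ms(text):
    """Return the service time of every matching log line, in milliseconds."""
    return [int(match.group(2)) for match in PATTERN.finditer(text)]


times = service_times_ms(LOG)
print(f"{len(times)} requests: min {min(times) / 1000:.1f} s, "
      f"mean {mean(times) / 1000:.1f} s, max {max(times) / 1000:.1f} s")
```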

Is that the whole story? Or should one pay David's consulting fees for the privilege of merely being able to test Lift?

Wondering if it made sense to expect anything from a Scala server, I searched for benchmarks made by people who know better than me. And I found a paper written by Jesse Lingeman and Max Salzberg of New York University:

Using Scala to Create a Simple High Performance Webserver

[...]

In testing, our simple server performed admirably. The server was tested on the class's machine, Stratus-4-11 (8 cores).

The final version of the class's server was able to handle between 2,200 and 2,300 connections per second for ten seconds (22,000-23,000 connections total) with 8 threads on the testing machine.

Ahem. So I might not have been wrong after all: my test on a 6-Core CPU gave results that, if not really "admirable", were consistent with the NYU test on an 8-Core machine.

There's probably no better way to find out what a Scala server can really deliver than to test the official Scala stack from Typesafe (Play! uses Scala, Akka, and Netty). Quoting the Web site:

"the Typesafe Stack is a modern software platform that makes it easy for developers to build scalable software applications in Java and Scala. It combines the Scala programming language, Akka middleware, Play! web framework, and robust developer tools".

"Typesafe was founded in 2011 by the creators of the Scala programming language and Akka middleware, who joined forces to create a modern software platform for the era of multicore hardware and cloud computing workloads."

"Typesafe provides training, consulting, commercial support, maintenance, and operations tools via the Typesafe Subscription (which grants access to: certified builds, monitoring tools, long-term maintenance with binary compatible updates, production support (SLA), certified hotfixes, developer support (SLA), input on future release roadmap)."

"For pricing information and sales inquiries, please contact us."

Typesafe co-founder and Scala Creator Martin Odersky (the EPFL professor) is also Chairman and Chief Architect. The company has been financed by Greylock Partners, Shasta Ventures, and Juniper Networks.

Testing the Scala "Play!" framework

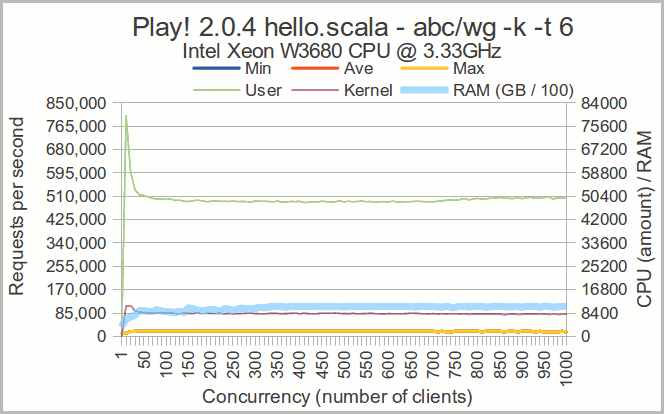

I first naively installed and ran the Play! framework, and got abominable results (it took more than 3 hours for Play! to do what G-WAN does in less than 3 minutes), with very low performance and huge RAM and CPU usage:

===============================================================================

G-WAN ab.c ApacheBench wrapper, http://gwan.ch/source/ab.c

-------------------------------------------------------------------------------

Machine: 1 x 6-Core CPU(s) Intel(R) Xeon(R) CPU W3680 @ 3.33GHz

RAM: 4.03/7.79 (Free/Total, in GB)

Linux x86_64 v#51-Ubuntu SMP Wed Sep 26 21:33:09 UTC 2012 3.2.0-32-generic

Ubuntu 12.04.1 LTS \n \l

> Collecting CPU/RAM stats for server 'java': 1 process(es)

pid[0]:7284 RAM: 805.66 MB

weighttp -n 1000000 -c [0-1000 step:10] -t 6 -k "http://127.0.0.1:9000/hello"

  Client           Requests per second               CPU

-----------  -------------------------------  ----------------  -------

Concurrency     min        ave        max      user     kernel   MB RAM

-----------  ---------  ---------  ---------  -------  -------  -------

1, 745, 745, 745, 6482, 518, 806.87

10, 719, 719, 719, 182963, 81475, 939.62

20, 709, 709, 709, 186608, 85022, 817.80

30, 722, 722, 722, 190416, 86533, 749.65

40, 729, 729, 729, 189018, 84158, 756.14

50, 733, 733, 733, 190576, 82326, 755.19

60, 735, 735, 735, 193033, 80538, 757.38

70, 771, 771, 771, 192273, 79381, 758.43

80, 755, 755, 755, 183211, 75828, 759.13

90, 749, 749, 749, 187958, 76837, 759.87

100, 741, 741, 741, 189330, 76818, 767.09
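A quick back-of-the-envelope check shows why this run took hours. The sketch below divides the 1 million requests of each step by the average throughput listed in the table. It assumes a single round per step (the identical min/ave/max columns suggest so, but that is my reading) and covers only the eleven steps shown here.

```python
REQUESTS_PER_STEP = 1_000_000  # weighttp -n, from the command line above

# concurrency -> average requests per second, copied from the table above
AVG_RPS = {
    1: 745, 10: 719, 20: 709, 30: 722, 40: 729, 50: 733,
    60: 735, 70: 771, 80: 755, 90: 749, 100: 741,
}


def step_seconds(rps):
    """Time needed to serve one step's requests at the given throughput."""
    return REQUESTS_PER_STEP / rps


total_seconds = sum(step_seconds(rps) for rps in AVG_RPS.values())
total_hours = total_seconds / 3600
mean_rps = sum(AVG_RPS.values()) / len(AVG_RPS)

print(f"mean throughput: {mean_rps:.0f} req/s")
print(f"estimated duration of the {len(AVG_RPS)} steps: {total_hours:.1f} hours")
```

At roughly 740 requests per second, each 1-million-request step needs over 20 minutes, so a few hours for the steps listed is what the arithmetic predicts.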

After a fair amount of time spent reading the prose of desperate Play! 2 users complaining about the performance loss as compared to version 1 (implemented in Java rather than in Scala), I found that (again) a configuration option had to be added to the application.conf file to switch from DEV to PROD mode.

Adding insult to injury, as with the Lift README file, the default Play! conf file, which listed commented options for 'secret keys', 'databases' and 'logging', carefully omitted any reference to the 'prod-mode' that I had to create (rather than just uncomment):

application.mode=prod

%prod.application.mode=prod

Then, it took me a significant amount of time to discover that, for the PROD configuration option to be taken into account, the Play! application has to be invoked in a completely different manner.

The following superbly ignores the configuration file and runs in DEV mode:

$ play run hello

To enable PROD mode, you have to use the following (intuitive) syntax instead:

$ play start --%prod

This time the damn thing started in 'production mode', and, indeed, it worked 14 times faster – bordering on 19k requests/sec – fast enough to chart the not-so-glorious results (the painful exercise lasted 90 minutes, with even higher RAM and CPU usage than during my initial attempt):

Conclusion

The 5,000 requests per second dips visible after concurrency 740 are due to an uninterrupted flow of connection time-outs which grow as concurrency grows.

As compared to Java servers tested earlier, the CPU & RAM scale had to be multiplied by 14 to cope with the humongous resource usage – more than 1 GB of RAM for a simple "hello world".

While the Play! framework did not die, it is clearly a remarkably efficient way to waste money – and it will run only on high-end many-core servers.

Clearly, the authors of the Scala-based Lift and Play! frameworks, as well as those of the New York University High-Performance Webserver, use the terms "performance" and "scalability" without regard for what other JVM servers deliver (even the venerable Apache Tomcat is massively faster).

But at the same time they were not shy about using hype such as "insane traffic levels" and "performed admirably" – an attitude that falls short of any scientific standard.

Given their profile, and the profile of those who endorse them, these declarations might have misled more than a few people.

And I did not really find the experience "super-easy" or "user-friendly", a couple of recurring claims of those Scala frameworks.

Using Scala client-side

Reading the text above, a Scala developer remarked:

"Note that Play! is not intended to be an app server. it is the Scala/Java equivalent of Ruby on Rails or Python's Django: A high-productivity web application development framework."

Making Scala (really) Scale, without Googling, without configuration, without secret incantations (nor the companion consulting fees)

While established Java servers like Apache Tomcat reach 160,000 requests/sec, we have seen that Lift, considered by many as the best Scala application server, does not scale (at all). An academic Scala server made at New York University does not perform any better. And the Play! framework, while having the great advantage of working, does not perform and does not scale.

By comparison, we have seen that Java scales much better than Scala as a server-side technology. This conclusion does not preclude the (potential) inherent qualities of the Scala language itself (like its syntax, the design of its API, or the concepts it illustrates). It's just that the current state of the Scala servers – despite the obvious inherent relevance of an application server to address the Parallel Programming challenge – does not reflect the true value of the innovations promoted by Scala.

And before you ask, the JVM is not the problem: (a) Java servers seem to be unaffected and (b) another Scala implementation based on the .NET platform does no better.

Since the conceptual stage is completed, what remains missing for Scala to scale is... a better execution. It is unfortunate that this option has been flatly ignored, wasting precious time and resources.

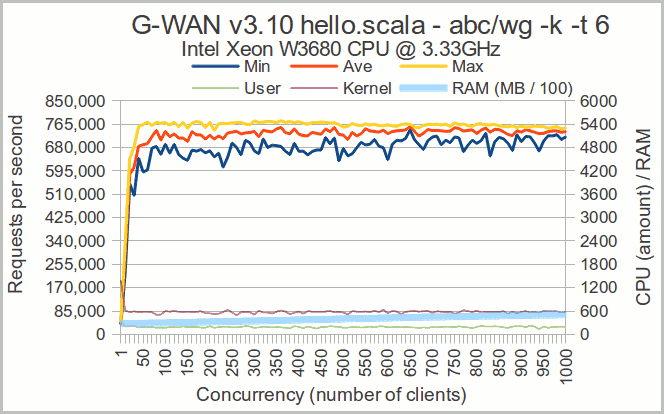

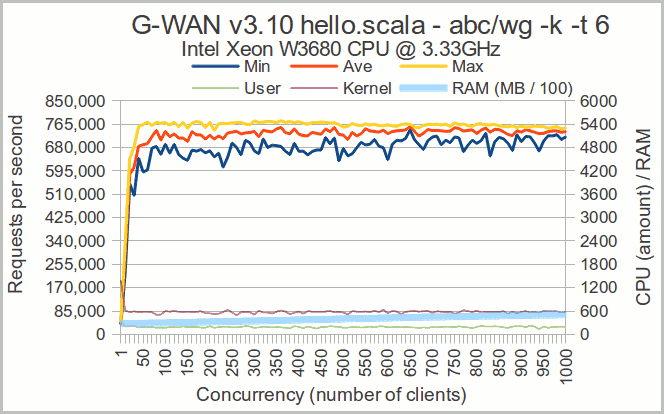

But since G-WAN supports both Java and Scala scripts as well as (tangible) high levels of concurrency, there's a chance for people to see if Scala is a "better Java" and therefore a credible server-side language – the very place where scalability matters:

G-WAN hello.scala

The total CPU usage is very low and Scala behaves decently.

Performance is quite good and scalability is pretty stable as concurrency grows.

As always with the JVM, the memory usage is rather high, but G-WAN keeps it at a reasonable level despite the load.

Scala is not impeding this "hello World": the computational load hits only the G-WAN Web server, which loaded the JVM to run Scala.

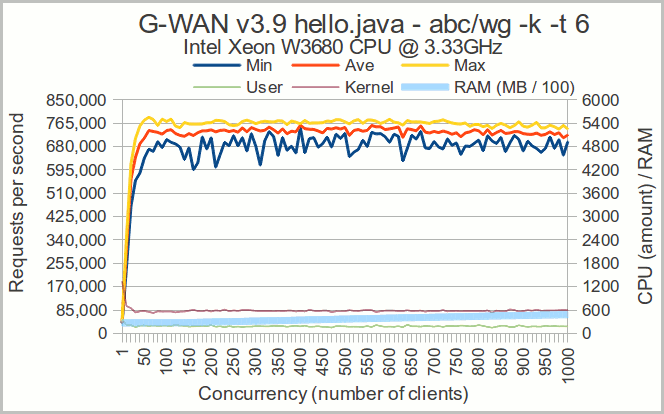

G-WAN hello.java

This test was done last month. Scala and Java seem to perform very closely, at least for such a "light" computational load.

And the JVM – when used properly – does not suffer in the exercise.

G-WAN is the only Web application server that makes Java and Scala perform and scale so well.

And G-WAN does this whatever the programming language: remember that last month we also tested C# / Mono which goes nicely, see below.

G-WAN hello.cs

The time when people claimed that "G-WAN is faster because it relies on C scripts" is clearly behind us.

All that was needed was for G-WAN to serve other programming languages.

G-WAN C scripts still perform better than Java, Scala or C#, but this is only because they rely on G-WAN's runtime which is clearly performing and scaling better than the runtimes used by the JVM or by Mono.

This should be obvious to all now.

Finally, researchers can now use a decent server to experiment with concurrency in Scala. Quoting the European Commission again:

The single most important problem facing the global IT industry has been resolved. There is no more risk that the underlying business model, in which hardware performance developments enable improved software which drives purchases of new hardware, will be broken. This ambitious but coherent attempt to address the Parallel Programming challenge has been successful. Consequently, this work is of enormous value, both intellectually and industrially.

It's called G-WAN (and it has never received funding or subsidies).