Blog: Theory vs. Practice

Diagnosis is not the end, but the beginning of practice.

› HOW-TO: Load-Balancing – Best Ways Explained, DNS Configuration Example

Many among us believe that they know the answers... but some might learn something and save a lot of money. First, let's review which problems load-balancing is expected to solve, and which features come as a bonus:

- scalability, distributing the load to backend servers,

- fail-safety, continuing despite backend maintenance,

- better performance, lower latency (caching, picking the fittest backend),

- better security, hiding and protecting backend servers,

- health checks of backend servers (pings, response time),

- offloading: TLS termination, caching and compression.

Some vendors certainly offer even more bonus features, but let's focus on the main purpose of a load-balancing reverse-proxy.

1. scalability: serving many clients, most of them idle (pausing to read contents).
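The backend-selection and health-check roles listed above can be sketched in C. This is a minimal least-connections picker, assuming a hypothetical `struct backend` whose `healthy` flag is maintained by a separate health checker. It is not G-WAN's (or any vendor's) algorithm; production load-balancers add weights, slow-start and connection draining.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical backend descriptor: field names are illustrative. */
struct backend {
    const char *name;     /* e.g. "10.0.0.11:8080" (illustrative)       */
    bool        healthy;  /* updated by a periodic health check         */
    unsigned    active;   /* connections currently assigned to it       */
};

/* Least-connections selection: among healthy backends, pick the one with
 * the fewest active connections. Ties are broken round-robin by starting
 * the scan just after the previous pick (*last).
 * Returns the chosen index, or -1 if no backend is healthy. */
int pick_backend(struct backend *b, size_t n, size_t *last)
{
    int best = -1;
    for (size_t k = 1; k <= n; k++) {
        size_t i = (*last + k) % n;   /* scan starting after last pick */
        if (!b[i].healthy)
            continue;                 /* skip failed backends          */
        if (best < 0 || b[i].active < b[best].active)
            best = (int)i;
    }
    if (best >= 0) {
        *last = (size_t)best;
        b[best].active++;             /* caller decrements on close    */
    }
    return best;
}
```

Least-connections suits the idle-client case above: a backend holding many slow readers receives fewer new connections than one holding few.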

› What about the Zig programming language?

A friend sent me this YouTube video. I found this small article interesting (and Wikipedia says Zig was created in 2016), so I wondered what has prevented it from making more progress. I watched the video for an hour (more than half of it).

First, like many others before it, Zig claims to be "better than C". So we will go through its claims and my view on their value.

Second, the founder seems to have a passion for performance, but the project goals might need a better-defined focus: beyond getting paid to use and rewrite existing tools, what are they really trying to achieve – and where is the value for users?

Is it worth spending time on it? If not, why?

I will try to address all these points in this article.

› G-WAN's Value: 450x Faster, and C/C++/JS/PHP are Legal again ("memory-safe")

Does security finally matter? PHP and JS (written in C/C++ and not "memory-safe") are endangered by an executive order:

US President Joe Biden's administration wants software developers to use memory-safe programming languages and ditch vulnerable ones like C and C++.

Only Rust (funded by the GAFAM) would be allowed (due to international regulations), despite Rust being created with C/C++, Rust not being "memory-safe" (search for "memory"), and Rust relying on memory-unsafe syscalls written in (you guessed it) C/C++.

Under the flawed logic of ever-stretched fallacies justifying administrative transfers of wealth from the masses to a few ever-growing industry players, this ban should include everything written in C/C++ (Rust, Windows, OSX, Linux, Android, all Web browsers, Java, JS, PHP, OpenSSL, NGINX, etc.) – and almost everything written by everyone for the past 50 years.

Only G-WAN+SLIMalloc have escaped this trap: G-WAN and its servlets are "memory-safe", an objective security criterion.

The number of vulnerabilities is also an objective code-quality indicator (from the U.S. gov. database of vulnerabilities):

NGINX: 248 vulnerabilities since 2004 (CVEs, Common Vulnerabilities and Exposures; the current count may differ)

G-WAN: 0 vulnerabilities (since 2009)

This is despite G-WAN's JIT scripted servlets (since 2009), which immensely enlarge the attack surface compared to NGINX, which only recently started adding basic scripting to generate dynamic contents (via slow IPC, inter-process communication, instead of loading the language runtimes like G-WAN does – which is much faster, but far more difficult to do safely).

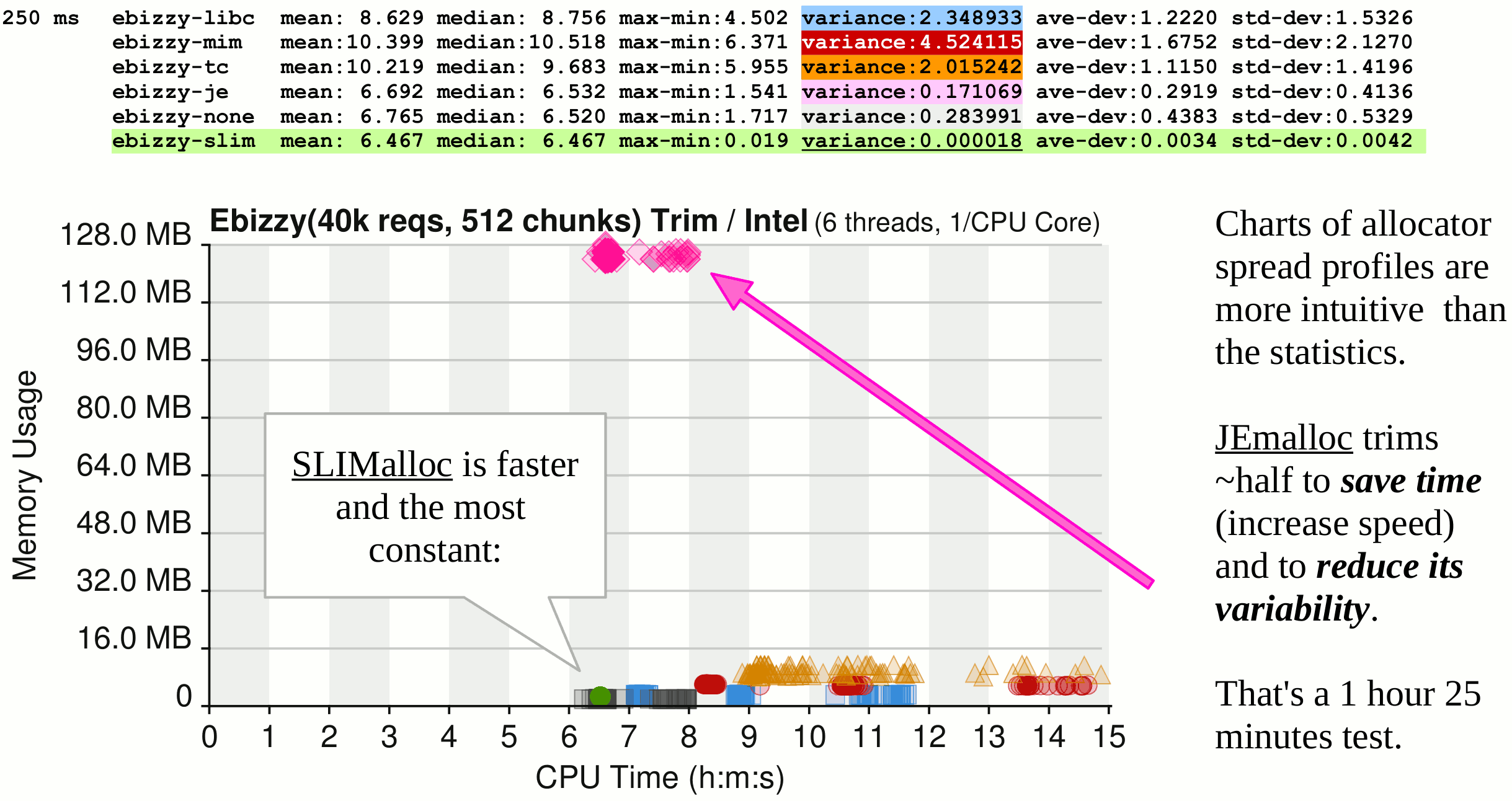

That was for 2009-2020, when G-WAN and NGINX relied on the same GLibC allocator that SLIMalloc has shown, in graphic detail, to be so unsafe (yet, surprisingly, a bit safer than Microsoft MIMalloc, Google TCMalloc, and Facebook/Meta JEmalloc which, don't ask me why, neglect to do the very basic checks done by the... 38-year-old GLibC).

A clear past CVE record is a notable feat for a Web Application Server, but adding some sustainable certainty is certainly better:

SLIMalloc (a memory allocator) secures G-WAN, its servlets, and the loaded languages runtimes and libraries by:

(1) not introducing memory-allocation vulnerabilities (unlike all others),

(2) checking whether accessed memory is under/over-written (none of the others bother),

(3) blocking and reporting memory violations – without crashing the program (yet another exclusive feature).
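To illustrate what "checking whether memory is under/over-written" can mean, here is a minimal guard-zone (canary) sketch. It is NOT SLIMalloc's method, which is not described here: canaries only detect a violation after the fact, when the block is checked or freed, whereas the list above describes blocking violations. The names `guarded_malloc`, `guarded_check` and `guarded_free` are hypothetical.

```c
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

#define HDR    16    /* stores the block size; keeps 16-byte alignment */
#define GUARD  16    /* canary bytes before and after the user block   */
#define CANARY 0xA5  /* illustrative fill pattern                      */

/* Layout: [ size | front canary | n user bytes | back canary ] */
void *guarded_malloc(size_t n)
{
    if (n > SIZE_MAX - HDR - 2 * GUARD)
        return NULL;                               /* size overflow     */
    unsigned char *raw = malloc(HDR + GUARD + n + GUARD);
    if (!raw)
        return NULL;
    memcpy(raw, &n, sizeof n);                     /* remember the size */
    memset(raw + HDR, CANARY, GUARD);              /* front canary      */
    memset(raw + HDR + GUARD + n, CANARY, GUARD);  /* back canary       */
    return raw + HDR + GUARD;
}

/* Returns 0 if both canaries are intact, -1 if the front one was damaged
 * (underwrite), +1 if the back one was damaged (overwrite). */
int guarded_check(const void *p)
{
    const unsigned char *user = p;
    const unsigned char *raw = user - GUARD - HDR;
    size_t n;
    memcpy(&n, raw, sizeof n);
    for (size_t i = 0; i < GUARD; i++)
        if (raw[HDR + i] != CANARY)
            return -1;
    for (size_t i = 0; i < GUARD; i++)
        if (user[n + i] != CANARY)
            return 1;
    return 0;
}

/* Checks, then frees. The status is returned so the caller can report
 * the violation instead of aborting the program. */
int guarded_free(void *p)
{
    if (!p)
        return 0;
    int status = guarded_check(p);
    free((unsigned char *)p - GUARD - HDR);
    return status;
}
```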

SLIMalloc (2020-2023) makes G-WAN safer than NGINX – and even safer than the so-called "memory-safe" languages (including Rust, which itself already had memory-safety vulnerabilities) because, unlike SLIMalloc, Rust flatly ignores the memory-violations originating from the OS (usermode and kernel) and third-party libraries... written in C/C++.

This is G-WAN's objective, heavily tested security, published on ResearchGate – a clear improvement on the "State of the Art":

Now that G-WAN's security has been explained, let's focus on objective, reproducible performance. And, to make sure that G-WAN would not be criticized for cherry-picking (like others), we used wrk2, a slower, more accurate version of the wrk benchmark written by... the NGINX team.

Why is wrk2 any better than wrk to accurately measure performance?

wrk sends a new request only after it has handled a server reply. By not tracking its own request rate, wrk fails to report slow, high-latency server replies. The wrk2 author aptly named this flaw (here, between the wrk client and the NGINX server) "Coordinated Omission". Servers can't reply faster than clients send their requests, and every client I have seen is G-WAN's bottleneck.

With G-WAN v17.2.7, wrk requires days to run a single "wrk -t10k -c10k http://127.0.0.1/100.html" test run... because wrk is unable to swiftly process the 242m G-WAN RPS.

The same NGINX v1.24.0 test runs in 10 seconds because wrk can handle the tiny 550k NGINX RPS in time. With such a tiny number of concurrent server replies, the slow wrk client does not need days.

In contrast, wrk2 sends requests to the server at a constant, user-supplied rate (the server will fail with I/O errors when overwhelmed). And wrk2 calibrates threads for 10 seconds (versus 0.5 second for wrk). The result is lower RPS scores than with wrk, but more accurate RPS and latency reports.

Since wrk2 sends requests at a constant rate (unlike wrk, which waits until it has handled server replies before sending new requests), wrk2 stops the test after 10 seconds, not days – even with G-WAN (which is much, much faster than wrk and wrk2).
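Coordinated Omission can be reproduced with a tiny simulation. This sketch models one connection to a FIFO server that normally answers in 100 microseconds but pauses once for 500 ms, and compares a closed-loop client (send only after each reply, like wrk) with a constant-rate client (one request per millisecond, latency measured from the intended send time, like wrk2). All parameters are illustrative assumptions, and this is not wrk or wrk2 code.

```c
#include <stddef.h>

#define N         1000    /* requests in the simulated test            */
#define INTERVAL  1000    /* intended send interval, microseconds      */
#define SERVICE   100     /* normal service time, microseconds         */
#define STALL     500000  /* one 500 ms server pause                   */
#define STALL_AT  100     /* index of the request hit by the pause     */
#define SLOW      1000    /* "slow request" threshold, microseconds    */

static long service_time(size_t i)
{
    return i == STALL_AT ? STALL : SERVICE;
}

/* Closed-loop client: the next request is sent only after the previous
 * reply, so measured latency is just the service time. */
size_t closed_loop_slow(void)
{
    size_t slow = 0;
    for (size_t i = 0; i < N; i++)
        if (service_time(i) > SLOW)
            slow++;
    return slow;
}

/* Constant-rate client: request i is due at i * INTERVAL; the FIFO server
 * starts it once it is due AND the previous one has finished. Latency is
 * measured from the intended send time, so queued requests are counted. */
size_t open_loop_slow(void)
{
    size_t slow = 0;
    long done = 0;                      /* completion time of previous */
    for (size_t i = 0; i < N; i++) {
        long due = (long)i * INTERVAL;
        long start = due > done ? due : done;
        done = start + service_time(i);
        if (done - due > SLOW)
            slow++;
    }
    return slow;
}
```

With these parameters the closed-loop client reports a single slow request, while the constant-rate client counts several hundred: every request that was due during the pause, or queued behind it.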

To test a 3-9 Watt MiniPC with a 4-Core ARM CPU and 16 GB RAM, wrk and wrk2 proved inadequate (either using too much RAM and being agonizingly slow, or simply lacking any real concurrency) and were therefore always G-WAN's bottleneck.

And beyond their asthmatic speed at high concurrencies, the gargantuan memory usage of wrk and wrk2 (cut short by the kernel's OOM killer) prevents testing at high concurrencies, simply because most machines (like this 16 GB MiniPC) don't have enough RAM! To illustrate the problem, we compared 2 ways of using wrk2 on our powerful i9-13900K PC with 192 GB RAM:

In our prior tests, wrk2 used 1 thread per connection to get more concurrency (result: G-WAN 281m RPS, NGINX 944k RPS):

    wrk2 -c1 -t1 -R500m "http://127.0.0.1:80/100.html"
    wrk2 -c100 -t100 -R500m "http://127.0.0.1/100.html"
    wrk2 -c1k -t1k -R500m "http://127.0.0.1/100.html"
    wrk2 -c10k -t10k -R500m "http://127.0.0.1/100.html"
    wrk2 -c20k -t20k -R500m "http://127.0.0.1/100.html"
    wrk2 -c30k -t30k -R500m "http://127.0.0.1/100.html"
    wrk2 -c40k -t40k -R500m "http://127.0.0.1/100.html"

(wrk2 uses 190 GB RAM, G-WAN 668 MB)

But in these tests targeting wrk2, 1 thread per Core was used, as NGINX does it (result: G-WAN 2m RPS, NGINX 1m RPS):

    wrk2 -c1 -t1 -R500m "http://127.0.0.1/100.html"
    wrk2 -c100 -t32 -R500m "http://127.0.0.1/100.html"
    wrk2 -c1k -t32 -R500m "http://127.0.0.1/100.html"
    wrk2 -c10k -t32 -R500m "http://127.0.0.1/100.html"
    wrk2 -c20k -t32 -R500m "http://127.0.0.1/100.html"
    wrk2 -c30k -t32 -R500m "http://127.0.0.1/100.html"
    ...
    wrk2 -c100k -t32 -R500m "http://127.0.0.1:80/100.html"

(wrk2 uses little RAM at low concurrencies)

Since G-WAN reached 281m RPS (NGINX 944k) with wrk2 using many threads, it is obvious that the G-WAN bottleneck is wrk2:

G-WAN cannot serve requests faster than sent by the wrk2 client.

This also explains why NGINX is not able to handle high concurrencies:

NGINX forces people to wait for their turn (in each per-process/thread event-based queue, only one person can embark at any given time). The more CPU Cores and processes/threads, the higher the CPU and RAM overheads – and the wait times for the people queuing to embark.

G-WAN lets tens of thousands of people travel together (each thread lets hundreds of thousands of people take a step forward together). The more CPU Cores and threads, the lower the CPU and RAM overheads – and the latency between steps.

These tests have demonstrated that G-WAN is much faster (and uses less RAM at a given concurrency level) than both wrk2 and NGINX (based on the same flawed design and implementation)... but also that a G-WAN-based benchmark tool is required to report its real performance.

That's how G-WAN performance is objectively measured and reproduced (scientists create and use benchmarks for a reason). Using the wrk2 NGINX testing tool on localhost or a LAN is "not the real world": it lacks the large Internet network latencies and users reading a page before visiting another page.

So, if you let G-WAN use Internet-sized timeouts, then it will handle idle connections just like NGINX (which also has to use proper timeout values). Claiming that NGINX can stack more idle connections than G-WAN is false – unless you configure NGINX with a large timeout and G-WAN with a small one, forcing it to close idle connections earlier.
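The timeout argument above boils down to a periodic sweep: any connection idle for longer than the configured timeout is closed, so two servers given the same timeout keep the same set of idle connections. A minimal sketch, assuming a hypothetical `struct conn` that records the time of last activity (a real server would also call `close()` and release the connection's buffers):

```c
#include <stddef.h>
#include <time.h>

/* Hypothetical connection record. */
struct conn {
    int    fd;           /* socket descriptor, -1 once closed         */
    time_t last_active;  /* time of the last byte received or sent    */
};

/* Mark as closed every connection idle for longer than `timeout`
 * seconds at time `now`. Returns how many connections were reaped. */
size_t reap_idle(struct conn *c, size_t n, time_t now, time_t timeout)
{
    size_t reaped = 0;
    for (size_t i = 0; i < n; i++) {
        if (c[i].fd >= 0 && now - c[i].last_active > timeout) {
            c[i].fd = -1;    /* a real server would close(fd) here */
            reaped++;
        }
    }
    return reaped;
}
```

With a small timeout the same idle clients are dropped sooner, which is why comparing idle-connection counts only makes sense at equal timeouts.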

When vendors revert to fallacies (after far more informative comparisons were furiously censored), that's not a good sign:

- NGINX "capability to handle many connections simultaneously" (944k for NGINX versus 281m for G-WAN...)

- "NGINX has a strong security track record" (with hundreds of vulnerabilities versus... zero vulnerabilities for G-WAN!)

- the "steeper [G-WAN] learning curve" (despite a zero-conf. G-WAN – compare that to the arcane NGINX configuration files)

- "G-WAN is a high-performance web server focusing on C scripts" (C and 17 other programming languages: JS, C#, PHP, Java...)

- "G-WAN primarily focuses on C/C++, but with certain tweaks, other languages might be executed" (the "tweaks" are just to install the language runtimes – then you can "edit and play" G-WAN servlets without any configuration!)

NGINX lacks G-WAN's (1) "edit and play" servlets in 18 programming languages and (2) "edit and play" contents/protocol handlers. So G-WAN developers can port NGINX modules to G-WAN – but NGINX has yet to offer anything close to G-WAN's "edit and play" servlets and handlers.

That was a long introduction – as, for the first time in my blog, I have presented the technical arguments before the question.

So, what was the question?

› How to Choose a Multicore CPU in 2025 – and How Much it will Matter

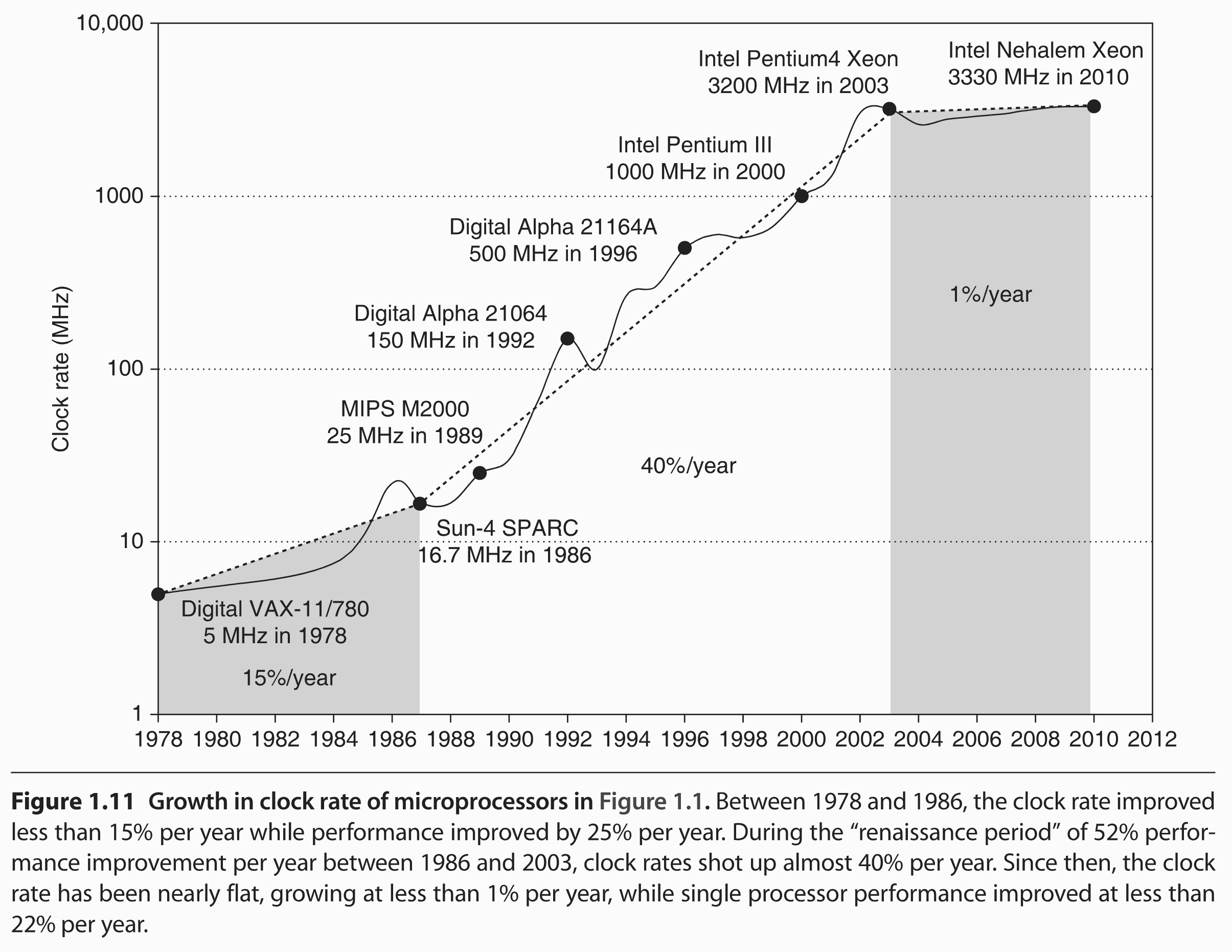

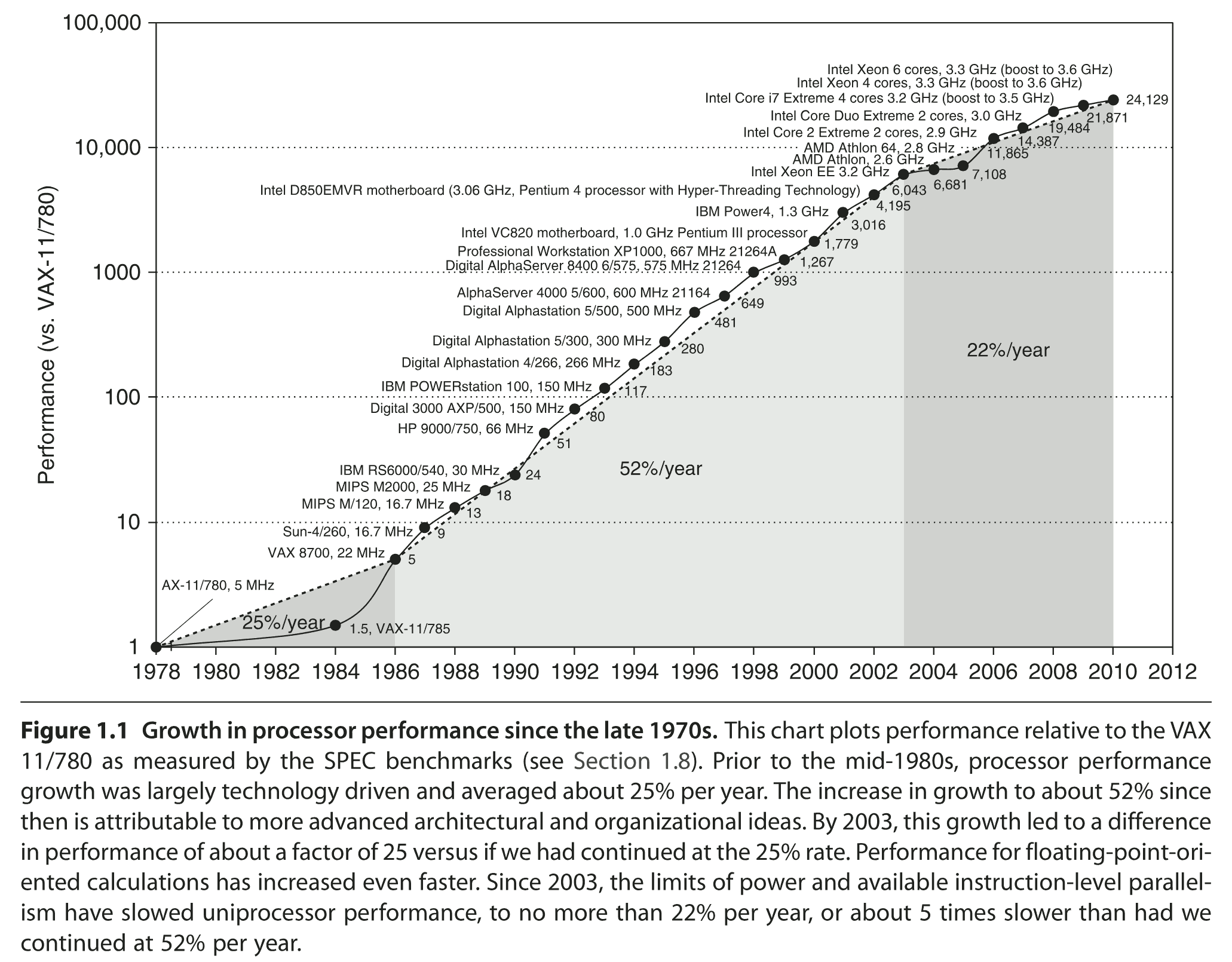

When I was young, CPUs were single-core, and the CPU frequency was an indicator of its speed. As the frequency was doubling every 2 years, code execution speed was also doubling (sometimes a bit more, thanks to new CPU instructions). The faster the CPU frequency, the better – things were simple:

After 2001 (and the end of CPU frequency growth), things became more difficult because CPU vendors started to market multicore CPUs under names that were no longer related to their capacity.

Today, larger reference numbers do not necessarily imply better performance: for example, there are Intel i5 or i7 CPUs that are faster than some i9 or Xeon CPUs.

CPU frequency remains a relevant criterion – but since CPU frequencies no longer grow beyond the 5-6 GHz limit, there are other important metrics to consider.

Higher clock speeds imply higher energy consumption, that's true.

But CPU "governors" (powersave vs. performance) and a user-defined variable clock-speed range (from 800 MHz to 5500 MHz for my i9 CPU) let you decide how much your CPU will consume (so a larger range is more desirable).
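On Linux, the governor and the allowed frequency range mentioned above are exposed by the cpufreq subsystem under `/sys/devices/system/cpu/cpuN/cpufreq/` (`scaling_governor`, `scaling_min_freq`, `scaling_max_freq`, the frequencies in kHz). A minimal read-only sketch in C; the helper names are hypothetical, the files are absent when no cpufreq driver is loaded (as in many VMs and containers), and writing to them requires root:

```c
#include <stdio.h>
#include <string.h>

/* Read the first line of a (sysfs) text file into buf, without the
 * trailing newline. Returns 0 on success, -1 if it cannot be read. */
int read_sysfs_line(const char *path, char *buf, size_t len)
{
    FILE *f = fopen(path, "r");
    if (!f)
        return -1;
    char *ok = fgets(buf, (int)len, f);
    fclose(f);
    if (!ok)
        return -1;
    buf[strcspn(buf, "\n")] = '\0';
    return 0;
}

/* Print the governor and the allowed frequency range (kHz) of one CPU.
 * Returns -1 if cpufreq is not available for that CPU. */
int print_cpufreq(int cpu)
{
    static const char *const files[] = {
        "scaling_governor", "scaling_min_freq", "scaling_max_freq"
    };
    char path[128], val[64];
    for (size_t i = 0; i < sizeof files / sizeof files[0]; i++) {
        snprintf(path, sizeof path,
                 "/sys/devices/system/cpu/cpu%d/cpufreq/%s", cpu, files[i]);
        if (read_sysfs_line(path, val, sizeof val) != 0)
            return -1;   /* no cpufreq driver for this CPU */
        printf("cpu%d %s: %s\n", cpu, files[i], val);
    }
    return 0;
}
```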

In 2009-2024, G-WAN was benchmarked on the 2008 Intel Xeon 6-Core that tops the charts on the left.

Purchased in 2024, my 2022 Intel Core i9 is 8.36 times faster overall, and its processing speed per Core (or thread) is 4.07 times higher than for the 2008 Xeon.

So the problem that everyone is facing is... how to navigate the hundreds of CPU references to pick the CPU you need?

Is it better to have many Cores – or to have fewer CPU Cores and a higher CPU frequency? Are there other criteria to take into account?

For Desktop machines, this maze is further obscured by the fact that PC vendors may forcibly add a (high-margin) graphics card... that duplicates your CPU's features (my i9 CPU embeds an "Intel Corporation Raptor Lake-S GT1 [UHD Graphics 770]").

Unless you need a dedicated GPU (my i9 runs 3D video games effortlessly), a PCI 3D graphics card pointlessly consumes a lot of power.

We will answer these questions, explain what the gains are and how to reduce your purchase and operating costs... also showing how a server "status" page may help.

› G-WAN HTTP server @ 1.05 Tbps is 4.1x faster than mere TCP servers @ 261 Gbps

The same engineer who told me about WRK2 in 2024 later suggested TCPkali to benchmark G-WAN in 2025.

While both tools are relevant, they have limits that G-WAN has revealed.

Why? Just because it was the only server able to push WRK2 and TCPkali beyond their comfort zone.

And since these limits may generate absurd values, it makes sense to find how they can happen in order to recognize and exclude them.

This is also pertinent in real life, that is, if you care about diagnosis, monitoring, or capacity planning.

For example, if you blindly believe WRK2, you will (incorrectly) state that:

– "G-WAN requests have a maximum latency of 3.96 seconds... in a 5.5m HTTP requests G-WAN test lasting 32.18 milliseconds."

(obviously, there's no possible way to have any request last more than 32.18 milliseconds in a 32.18 milliseconds test)

And if you blindly believe TCPkali, you will (incorrectly) state that:

– "G-WAN HTTP/1.1 is much faster than the TCP protocol (despite G-WAN doing HTTP parsing, validation and formatting)."

(obviously, there's no possible way to have any TCP transfers go beyond the real TCP bandwidth limit of the system)

Of course, both statements are ridiculous and can only be false. But it's very easy for the distracted to be tricked. In this two-step post, we will explain how such obviously wrong numbers can be reported by otherwise trustworthy tools.

And comparing HTTP to TCP performance demonstrates that G-WAN is such a better server that it is more than 4x faster than mere TCP servers. With 25.6 Tbps connectivity already available, relying on obsolete servers like NGINX wastes a lot of money.
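The two impossible results quoted in this post can be caught mechanically before they reach a report. A minimal sketch, assuming you know the test duration and have measured the link (or loopback) bandwidth separately; the `struct report` fields are hypothetical and do not map to any wrk2 or TCPkali output format:

```c
#include <stdbool.h>

/* Hypothetical summary of one benchmark run. */
struct report {
    double duration_s;     /* wall-clock length of the test            */
    double max_latency_s;  /* largest latency reported by the tool     */
    double bytes;          /* payload bytes the tool claims to receive */
};

/* A request cannot last longer than the test that contains it. */
bool latency_plausible(const struct report *r)
{
    return r->max_latency_s <= r->duration_s;
}

/* Throughput cannot exceed the bandwidth of the measured path
 * (link_bps, in bits per second, measured independently). */
bool throughput_plausible(const struct report *r, double link_bps)
{
    return r->duration_s > 0 && r->bytes * 8.0 / r->duration_s <= link_bps;
}
```

For the wrk2 example above, a maximum latency of 3.96 seconds in a 32.18 millisecond test fails `latency_plausible()`.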

› How G-WAN went from 850k RPS (in 2012) to 242m RPS (in 2025)

Wonder why China leads Big-Tech? U.S. and European products are all the same: neither performant, nor safe, nor innovative – yet a few (financially-dominant but technically mediocre) vendors get all the business. Copy & paste has replaced R&D (for the sake of infinite debt-financed growth). Jobs are disappearing, and the ones that remain are boring and degrading. I will show here how we all can (and therefore should) do much better.

After 45 years of engineering, I have seen a lot of organizations, platforms, people and programs. I always felt there was a fundamental difference between people (and therefore their speeches and works). I believe that it explains how G-WAN has evolved while all others have stagnated: NGINX 2025 is slower (on 8x faster 2022 CPUs) than G-WAN 2009 (on 8x slower 2008 CPUs)!

In 2009, I wrote G-WAN because none of the available HTTP servers matched my needs. When I had something to publish (faster, simpler, more reliable), I shared my work as freeware with my views about what was (and still is) wrong elsewhere.

In 2025, G-WAN (242m RPS) is 453 times faster than NGINX (555k RPS) with 10k users, an uncached 100-byte file, on an Intel Core i9 CPU. With such energy and hardware specs, my $1.5k PC is a Cloud.

In 2025, Wikipedia states that Google uses 2.5 million servers to serve an estimated 40m searches per second – multiplied by 5 as "4 parts responds to a part of the request, and the GWS assembles their responses and serves the final response to the user" said Google in a 2003 report.

200m RPS (5 * 40m RPS) served by 2.5m servers gives 200m / 2.5m = 80 RPS per server. The potential energy and hardware costs are gigantic, hence the GAFAM now buying nuclear plants to cope with the increasing traffic and operating costs!

Who needs scalability? Startups? Internet, Phone & TV networks? Data centers, Web hosting, SaaS and Cloud operators? Video streaming and Payment platforms? The GAFAM (operating systems, Web browsers, search engines, social networks)? Government administrations? Stock exchanges? Clearing houses? Banks?

Already we can see that some of these players have an incentive to promote inefficiency (and censor efficiency) to preserve (or grow) their revenues – at the expense of their customers (the largest of all being governments, that is, the taxpayer).

If you can't (or don't want to) buy nuclear plants, there's G-WAN.

› What makes "Disruptions" Successful?

The short answer is "time". If an innovation is really useful, then everybody will eventually use it, one day. But well-known innovations may stay dormant for centuries – as long as people don't believe that doing such a thing is possible. Let's see how this happens, how different actors play in favor of (or against) disruptions, and why.

"An invasion of armies can be resisted, but not an idea whose time has come."

– Victor Hugo

Mail is a good example. We all know that it started with people running from one point to another to deliver a message. Then horses and boats transported letters, and we got railways, the telegraph, faxes and the Internet.

But very few among us know how long innovations have to wait until they are enforced by authorities with enough clout to lift them beyond the status of a mere curiosity. For example, in the 18th century, French king Louis XV replaced religious, academic, and other postal services with the Royal Post, a monopoly given to the "Black Cabinet" to spy on supposedly ever-conspiring people.

Electricity, seen in the sky and used in experiments by the Greek philosopher Thales (600 BC), had to wait until 1750 for new insights from Benjamin Franklin, a largely self-taught researcher, one of the 'Founding Fathers of the United States of America', Pennsylvania President, several times US Minister, slave owner, and large-scale securities speculator (his face is on $100 bills).

Soon after, Alessandro Volta invented the battery in 1800, and Michael Faraday invented the electric motor in 1821. Things then accelerated with Nikola Tesla, Thomas Edison and many others who contributed to the "Second Industrial Revolution", in which electricity lost its status as a near-magical, mysterious force.

› What makes something "scalable"?

Almost all Web servers and Database servers distributed today are "highly-scalable". Or so say the vendors because that's a keyword that end-users, media and search engines value as the promise for big savings.

And scalability indeed matters: it is the ability to perform well while concurrency grows. For a server, it means that serving one or 10,000 users must be done with low latency (this is the application's responsiveness, also called 'user experience').

Let's consider G-WAN and the ORACLE noSQL Database.

This demo will be presented in the noSQL and Big Data sessions of ORACLE Open World (OOW), the 45,000-person event held in San Francisco on Sept. 30 – Oct. 4, 2012:

On the left, there is a photo of the session pitch taken by Alex.

The G-WAN presentation (the G-WAN-based PaaS) can be found here.

› What is "2D Scalability"?

If you provide a successful service, then you have to make your application(s) scale. There are two dimensions which can then be involved for scaling:

- horizontally using several machines, each of them running your application(s)

- vertically using several workers running your application(s) on each machine.

The second way of doing things, scaling vertically, was introduced in the early 2000s on commodity hardware (a decade ago). Despite multicore now being ubiquitous, from cellphones to Data Centers, network switches, TVs, planes, trains, boats, automobiles, laptops and desktops, there is still a wide gap as far as software development tools are concerned.

The question is not whether or not you will have to use parallelism (adding a dimension to the equation grants you access to exponential gains), the question is how to do it so as to reduce costs. After all, you have paid for this hardware, so not using its capabilities is quite a pity.
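Vertical scaling on one machine usually means one worker per CPU Core. A minimal POSIX-threads sketch, assuming a simple summation as a stand-in workload (not G-WAN's design): the data is split into one slice per online Core, as reported by `sysconf(_SC_NPROCESSORS_ONLN)`, and each worker writes only its own slice result, so no locking is needed.

```c
#include <pthread.h>
#include <stdbool.h>
#include <stddef.h>
#include <unistd.h>

#define MAX_WORKERS 256

/* One worker's share of the work and its private result. */
struct slice {
    const long *data;
    size_t      from, to;   /* half-open range [from, to) */
    long        sum;
};

static void *sum_slice(void *arg)
{
    struct slice *s = arg;
    long sum = 0;
    for (size_t i = s->from; i < s->to; i++)
        sum += s->data[i];
    s->sum = sum;           /* each worker writes only its own slice */
    return NULL;
}

/* Split the array into one slice per online Core, sum the slices in
 * parallel, then combine the partial results. */
long parallel_sum(const long *data, size_t n)
{
    long cores = sysconf(_SC_NPROCESSORS_ONLN);
    size_t w = cores > 0 ? (size_t)cores : 1;
    if (w > MAX_WORKERS)
        w = MAX_WORKERS;

    pthread_t    tid[MAX_WORKERS];
    bool         started[MAX_WORKERS];
    struct slice s[MAX_WORKERS];

    for (size_t k = 0; k < w; k++) {
        s[k] = (struct slice){ data, n * k / w, n * (k + 1) / w, 0 };
        started[k] = pthread_create(&tid[k], NULL, sum_slice, &s[k]) == 0;
        if (!started[k])
            sum_slice(&s[k]);   /* fall back to the calling thread */
    }

    long total = 0;
    for (size_t k = 0; k < w; k++) {
        if (started[k])
            pthread_join(tid[k], NULL);
        total += s[k].sum;
    }
    return total;
}
```

Horizontal scaling repeats the same pattern across machines, with a load-balancer distributing the requests instead of the slice boundaries.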

› What makes something "secure" ?

The total lack of uncertainty.

Very few things are considered "secure" because the sun can blow us all without notice, because the ground can melt in a volcanic eruption, and because each of us can die from a heart attack – at any moment.

So what makes something "secure" must be independent of the known and unknown laws of the universe – and, more generally, of anything that we cannot control, like exterior conditions.

And this is not as difficult as it sounds.

› Performance vs. Scalability

It's software that makes a fast machine slow.

The difference between Performance and Scalability

For software running on a single machine, the definitions are simple:

- Performance: speed.

- Scalability: ability to perform better as more CPU Cores are involved.

Speed is obviously desirable. But in a world of parallelism (multi-Core CPUs are today's reality) scalability is mandatory: without scalability, software can only use one Core or two (even if you have 64 idle Cores begging for work).

And without performance, scalability is pointless: if you need 64 Cores to achieve what can be done with one single Core, that's a shameful waste of resources (which comes at a hefty price).

Conclusion: Performance and Scalability are (much) needed. Preferably in equal amounts (close to the theoretical ideal).
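The two definitions can be put into numbers: speedup is the one-Core time divided by the N-Core time for the same work, and parallel efficiency is speedup divided by N (1.0 being the theoretical ideal). A minimal sketch in C; the function names are illustrative:

```c
#include <assert.h>

/* Speedup: how many times faster the same work completes on N Cores
 * (t1 = elapsed time on one Core, tn = elapsed time on N Cores). */
double speedup(double t1, double tn)
{
    assert(t1 > 0 && tn > 0);
    return t1 / tn;
}

/* Parallel efficiency: speedup per Core; 1.0 is perfect scaling. */
double efficiency(double t1, double tn, int cores)
{
    assert(cores > 0);
    return speedup(t1, tn) / cores;
}
```

Efficiency only measures scalability against a program's own single-Core time, so a slow program can still scale perfectly. To capture performance as well, use the time of the fastest known single-Core implementation as `t1`: 64 Cores doing in 1 second what one Core does in 1 second yields an efficiency of 1/64.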

› The Future of the Internet

Here, a Salt Lake City media outlet (Salem-News, with 98 writers in 22 countries) shares views about the costs associated with the distribution of information.

The U.S. state of Oregon, located on the Pacific Northwest coast, hosts large datacenters (Google, Amazon, Facebook, etc.) to take advantage of cheap power (hydroelectric dams) and a climate conducive to reducing cooling costs.

Having received an invitation to provide insights about how software can help make this industry sustainable in the long term despite the explosion of Internet clients, we have tried to extract from our experience a point of view rarely mentioned in purely factual studies.

While finance and equipment surely help, we explain why we believe that the human factor can play a decisive role in this picture.