Theory vs. Practice

Diagnosis is not the end, but the beginning of practice.

One more Terabyte per second, anyone?

In the past, G-WAN attracted web developers. Since last year, it has been attracting CTOs who have exhausted all their options. Most of them face increasingly interesting scalability problems – the kind that no off-the-shelf product can address.

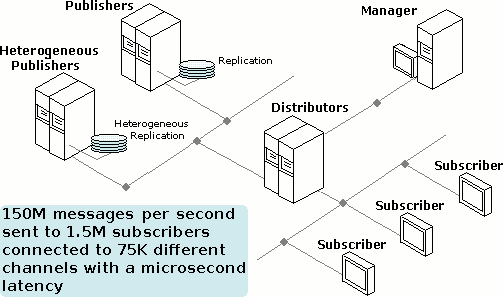

One such CTO wanted to unify several financial systems handling a total of more than 150 million messages per second.

Once collected and sorted by category, priority and audience type, all these messages then had to be reliably dispatched in real time to more than 1.5 million users, most of them paying subscribers – the kind that are notoriously, eye-wateringly expensive to disappoint.

After a couple of failures involving gargantuan open-source projects designed to scale but not to perform, spiraling budgets and long-overdue results, this CTO was ready to embrace anything that could "just do the job".

Preferably right now.

The live haystack

When you have to deal with a moving target, you must act swiftly. And if the target's weight can crush you at the slightest moment of doubt, you also have to stay focused and keep delivering whatever happens, because storing is no longer an option: unless you are a billion-dollar company able to design and deploy its own infrastructure, data accumulates faster than you can transfer and store it remotely.

Such a statement might look gratuitously self-gratifying – until you start dealing with Terabytes of data per second.

From this perspective, the "Internet of Things" is going to prove a lot of consultants, I.T. vendors and Cloud players relying on standard open-source projects wrong. Not gently wrong, the way we have all grown used to with benign bugs that crash a spreadsheet at the worst moment. Here we are talking about being plain deadly wrong, in a way that can never be recovered from.

And we are not even considering the dire costs of ubiquitous security issues. Lazy designs that cannot seriously scale (or last without failing) will simply disqualify almost all of today's offers... and development teams (reality has this irritating habit of defeating the abstract beauty of the theories exposed in academic papers).

When such gaps appear, the implementation is often blamed, but rarely the design itself. Yet most server products (whether HTTP, database, or email) are not ready for BigData – let alone for the "Internet of Things".

G-WAN's flexibility, scalability and features (used here as an organ bank) helped us meet all these expectations in less than a week, with a fully functional prototype that proved good enough to serve as the backbone of the final project.

From then on, people were paid to plug features around this generic, distributed and truly scalable communication platform rather than to try the next "promising" open-source project, one that would require months and tens of millions of dollars of painful consulting and integration only to prove... unsuitable for the task.

Today's BigData infrastructure is based on obsolete technologies that will not withstand tomorrow's data-crunching challenges. The sooner people realize it, the more they will get for the money they invest.

Hardware, Costs, and Lessons re-Learned

This should not come as a surprise to the authors of G-WAN (a 200 KB application server supporting 17 scripted programming languages), but it is always a useful reminder: whether you code for embedded systems or highly parallelized servers, efficient solutions (now called "parallelized", "locality-conscious" or "cache-friendly") help performance and scalability so much that efficiency should be a design rule today.

These considerations allowed us to resolve this CTO's nightmare with 5 servers consuming 250 Watts each. Not bad for processing and transferring 20 Terabits of data per second.

Even better: scaling up to 100 Terabits per second requires only 20 more servers – boring, linear scalability.

DISCLAIMER: this stunt was done by seasoned professionals with dedicated, hand-coded tools – don't try this at home!

It also helps to sketch (and preserve) a clean design. "Clean" means both versatile and rock-solid. "Clean" means easy to understand, implement and maintain. "Clean" means you won't have to change it because nothing else fits the task better. "Clean" means that bugs, if any, can only be elsewhere.

Another point worth mentioning is that data wants to flow freely. Don't build lists, queues, or anything else that will slow it down or, worse, turn it into dead fat. Mere mortals can store the insights extracted from data, but not data volumes of this kind.

If a new design does not replace hundreds of megabytes of compiled code (and usually myriads of libraries and processes talking to each other), then you are almost certainly doing it wrong. You might disagree, of course, and even succeed in making it work – at costs pointlessly inflated by several orders of magnitude.

The "Internet of Things" is different. Or rather, it will feel familiar to those who started coding 35 years ago: count every bit you use, because it will be missing from another part of the system – like a CPU left idle by contention and starved of data to crunch.

Speed has its demands. Ask anyone who has shaped a Mach 3+ aircraft.