Theory vs. Practice

Diagnosis is not the end, but the beginning of practice.

G-WAN HTTP server @ 1.05 Tbps is 4.1 times faster than mere TCP servers @ 261 Gbps

The same engineer who told me about WRK2 in 2024 later suggested tcpkali to benchmark G-WAN in 2025.

While both tools are relevant, they have limits that G-WAN has revealed.

Why? Because G-WAN was the only server able to push WRK2 and tcpkali beyond their comfort zone.

Since these limits can generate absurd values, it makes sense to understand how they happen, in order to recognize and exclude them.

This also matters in real life if you care about diagnosis, monitoring, or capacity planning.

For example, if you blindly believe WRK2, you will (incorrectly) state that:

– "G-WAN requests have a maximum latency of 3.96 seconds... in a 5.5m HTTP requests G-WAN test lasting 32.18 milliseconds."

(obviously, no request can last more than 32.18 milliseconds in a test lasting 32.18 milliseconds)

And if you blindly believe TCPkali, you will (incorrectly) state that:

– "G-WAN HTTP/1.1 is much faster than the TCP protocol (despite G-WAN doing HTTP parsing, validation and formatting)."

(obviously, no TCP transfer can go beyond the real TCP bandwidth limit of the system)

Of course, both statements are ridiculous and can only be false. But it is very easy for a distracted reader to be tricked. In this two-step post, we will explain how such obviously wrong numbers can be reported by otherwise trustworthy tools.

Comparing HTTP to TCP performance also demonstrates that G-WAN is so much better a server that it is more than 4x faster than mere TCP servers. With 25.6 Tbps connectivity already available, relying on obsolete servers like NGINX wastes a lot of money.

STEP 1 - the very tangible limits of WRK2 and NGINX

Let's start with another G-WAN WRK2 benchmark, this time with a larger static file (G-WAN uses HTTP error templates, caches them on disk on first use, and then serves them as static files in two versions: plaintext and gzipped).

Since the purpose of this test is not a side-by-side comparison with NGINX, I left the G-WAN 404 template as it is, even though it is 4.23 times larger than NGINX's (the HTTP header fields are equivalent in both cases):

– NGINX 404 HTML page (162 bytes)

– G-WAN 404 HTML page (685 bytes, 4.23 times larger than NGINX's)

Hardware/system/application settings:

--------------------------------------------------------------------------------

- cold boot

- Intel Core i9 CPU frequency: "performance", 5500 MHz

- Ubuntu 24.04 LTS

- no Linux kernel tuning

- UFW local firewall not disabled

- client and server terminals: prlimit --pid=$$ --nofile=1048576

- NGINX v1.24.0, G-WAN v17.2.7

- gzip compression not used by WRK2 client

- best result of 3 tests for each server
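Each of WRK2's 10,000 connections needs a file descriptor in the WRK2 process, and each accepted connection needs one in the server process. The default soft limit on many Linux systems (often 1024) would be far too low, which is presumably why both terminals raise it with prlimit. As a minimal sketch, assuming Linux and Python's standard `resource` module (the helper name `ensure_nofile` and the 64-descriptor margin are illustrative, not part of the original setup), a process can check and raise its own soft limit. Unlike prlimit run with sufficient privileges, an unprivileged process can only raise its soft limit up to its current hard limit.

```python
import resource


def ensure_nofile(wanted: int) -> tuple[int, int]:
    """Raise this process's soft RLIMIT_NOFILE toward `wanted`.

    Hypothetical helper: an unprivileged process may only raise its soft
    limit up to the current hard limit, so the target is capped there.
    Returns the (soft, hard) limits in effect afterwards.
    """
    soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    # RLIM_INFINITY must be compared explicitly (it is -1 on Linux).
    target = wanted if hard == resource.RLIM_INFINITY else min(wanted, hard)
    if soft != resource.RLIM_INFINITY and soft < target:
        resource.setrlimit(resource.RLIMIT_NOFILE, (target, hard))
    return resource.getrlimit(resource.RLIMIT_NOFILE)


if __name__ == "__main__":
    # 10,000 sockets plus an assumed margin for stdio, logs, epoll fds, etc.
    soft, hard = ensure_nofile(10_000 + 64)
    print(f"nofile: soft={soft} hard={hard}")
    if soft != resource.RLIM_INFINITY and soft < 10_000:
        print("warning: soft limit too low for -c10k")
```

If the hard limit itself is below 10,000, only a privileged command such as the prlimit call above (or a change to the system limits) can fix it.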

NGINX: 609k RPS (189.52 MB/sec, that is, 1.48 Gbps)

--------------------------------------------------------------------------------

wrk2 -t10k -c10k -R1m "http://127.0.0.1:80/404.html"

Initialised 10000 threads in 2200 ms.

Running 10s test @ http://127.0.0.1:80/404.html

10000 threads and 10000 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 1.67s 1.18s 5.50s 0.03%

Req/Sec -nan -nan 0.00 0.00%

6749911 requests in 11.07s, 2.05GB read

Socket errors: connect 0, read 0, write 0, timeout 453

Non-2xx or 3xx responses: 6749911

Requests/sec: 609602.18

Transfer/sec: 189.52MB

G-WAN: 172m RPS (134.72 GB/sec, that is, 1.05 Tbps)

--------------------------------------------------------------------------------

wrk2 -t10k -c10k -R1m "http://127.0.0.1:8080/404.html"

Initialised 10000 threads in 2203 ms.

Running 10s test @ http://127.0.0.1:8080/404.html

10000 threads and 10000 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 659.59ms 858.07ms 3.96s 0.01%

Req/Sec -nan -nan 0.00 0.00%

5534494 requests in 32.18ms, 4.33GB read

Non-2xx or 3xx responses: 5534494

Requests/sec: 172006899.55

Transfer/sec: 134.72GB

Results:

--------------------------------------------------------------------------------

NGINX (162 bytes) vs. G-WAN (685 bytes) HTTP error 404 test:

- NGINX / WRK2 609k RPS (1.48 Gbps), timeout errors: 453

- G-WAN / WRK2 172m RPS (1.05 Tbps), 282x more RPS, 727x more data, no timeout errors

Despite a file 4.23 times larger, G-WAN is 282 times faster than NGINX (G-WAN delivered a comparable number of requests, and more than twice the data, in 0.03218 seconds instead of... 11.07 seconds for NGINX):

– NGINX: 6749911 requests in 11.07s, 2.05GB read

– G-WAN: 5534494 requests in 32.18ms, 4.33GB read (less time than half of the 0.07s fraction in NGINX's 11.07s)
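The derived figures above can be recomputed from the two wrk2 reports. This sketch assumes the conventions the post's numbers appear to follow: wrk2's MB and GB are 1024-based, bytes/s become bits/s with a factor of 8, and a factor of 1024 is kept between prefixes (so 1077.76 Gbps is reported as 1.05 Tbps). It also divides the bytes read by the number of responses to estimate how many bytes each response carried; since wrk2 rounds its GB totals to two decimals, these per-response figures are approximate.

```python
GIB = 1024 ** 3  # wrk2's "GB" assumed to be 2**30 bytes

# Figures copied from the two wrk2 reports above.
NGINX = {"rps": 609_602.18, "mb_per_s": 189.52, "requests": 6_749_911,
         "gb_read": 2.05, "body_bytes": 162}
GWAN = {"rps": 172_006_899.55, "mb_per_s": 134.72 * 1024, "requests": 5_534_494,
        "gb_read": 4.33, "body_bytes": 685}


def gbps(mb_per_s: float) -> float:
    """MB/s to Gbps: x8 for bits, /1024 between prefixes (the post's convention)."""
    return mb_per_s * 8 / 1024


def bytes_per_response(server: dict) -> float:
    """Average bytes read per response, HTTP headers included."""
    return server["gb_read"] * GIB / server["requests"]


for name, s in (("NGINX", NGINX), ("G-WAN", GWAN)):
    per_response = bytes_per_response(s)
    print(f"{name}: {gbps(s['mb_per_s']):.2f} Gbps, ~{per_response:.0f} bytes/response, "
          f"~{per_response - s['body_bytes']:.0f} bytes of headers")

print(f"RPS ratio:  {GWAN['rps'] / NGINX['rps']:.1f}x")
print(f"data ratio: {GWAN['mb_per_s'] / NGINX['mb_per_s']:.1f}x")
```

Both servers come out at roughly 155 to 165 bytes of headers per response, which is consistent with the statement that the header fields are equivalent; the difference in bytes per response comes from the body size.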

WRK2 reports a G-WAN maximum latency of 3.96s (less than NGINX's 5.50s).

But wait: the total G-WAN execution time reported by WRK2, 32.18ms, is about 1/20th of the average per-request latency (659.59ms / 32.18ms ≈ 20.5)... with 5.5m requests! This is obviously wrong. This cannot be the G-WAN latency; it is rather the WRK2 latency:

– G-WAN: 0.03218 seconds (that is, 32.18 ms, so G-WAN is severely under-solicited by the very slow WRK2)

– NGINX: 11.07000 seconds (lagging, trying to cope with WRK2's -c10k -R1m high concurrency... but failing with 453 timeouts)

– WRK2: 10.00000 seconds (the scheduled duration: WRK2 started and stopped the test, trying to read as many G-WAN replies as it could)
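Absurd values like these can be caught mechanically. This is a minimal sketch, not a wrk2 feature: the hypothetical `check_wrk2_report` function parses the summary text (assuming wrk2's usual duration suffixes `us`, `ms`, `s`, `m`, `h`) and flags a report in which a latency exceeds the measured run time, or in which the measured run is much shorter than the scheduled `Running ... test` duration. The 50% threshold for that last check is an arbitrary assumption. A flag only says that the report is internally inconsistent; it does not say which figure is wrong.

```python
import re

# Duration suffixes assumed for wrk2 summaries (microseconds up to hours).
UNITS = {"us": 1e-6, "ms": 1e-3, "s": 1.0, "m": 60.0, "h": 3600.0}
DURATION = re.compile(r"([\d.]+)(us|ms|s|m|h)")


def seconds(token: str) -> float:
    """Convert a wrk2 duration token such as '32.18ms' to seconds."""
    match = DURATION.fullmatch(token)
    if match is None:
        raise ValueError(f"not a wrk2 duration: {token!r}")
    return float(match.group(1)) * UNITS[match.group(2)]


def check_wrk2_report(text: str, min_run_fraction: float = 0.5) -> list[str]:
    """Return the inconsistencies found in a wrk2 summary (empty if none).

    Hypothetical helper: these are sanity rules, not part of wrk2.
    """
    latency = re.search(r"Latency\s+(\S+)\s+(\S+)\s+(\S+)", text)
    run = re.search(r"(\d+) requests in (\S+?),", text)
    if latency is None or run is None:
        raise ValueError("missing 'Latency' or 'requests in' line")
    avg, worst = seconds(latency.group(1)), seconds(latency.group(3))
    elapsed = seconds(run.group(2))

    problems = []
    # No request can outlast the window in which it was measured.
    if worst > elapsed:
        problems.append(f"max latency {worst:.3f}s > run time {elapsed:.5f}s")
    if avg > elapsed:
        problems.append(f"avg latency {avg:.3f}s > run time {elapsed:.5f}s")
    # A run far shorter than scheduled is suspicious too (arbitrary threshold).
    scheduled = re.search(r"Running (\S+) test", text)
    if scheduled and elapsed < min_run_fraction * seconds(scheduled.group(1)):
        problems.append(f"run time {elapsed:.5f}s << scheduled {scheduled.group(1)}")
    return problems


GWAN_REPORT = """Running 10s test @ http://127.0.0.1:8080/404.html
  Latency 659.59ms 858.07ms 3.96s 0.01%
  5534494 requests in 32.18ms, 4.33GB read"""

for problem in check_wrk2_report(GWAN_REPORT):
    print("suspicious:", problem)
```

Run against the NGINX report, the same checks find nothing to flag, because its 5.50s maximum latency fits inside its 11.07s run.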

Therefore, in reality, for this 404 page, no client had to wait more than:

– G-WAN: 0.03218 seconds (the G-WAN execution time, that is, 0.3% of the whole WRK2 execution time)

– NGINX: 5.50000 seconds (55% of the whole WRK2 execution time)

WRK2 has qualities (such as the ability to stop the test at the scheduled time, unlike WRK, which would take hours if not days to handle all G-WAN responses), but some WRK2 defects remain at high concurrency:

– in 10 seconds, WRK2 can handle less than 0.3% of all G-WAN responses,

– WRK2 consumes 99% of the CPU while G-WAN consumes less than 1% during this test,

– WRK2 cannot report precise G-WAN statistics, overwhelmed by G-WAN's 172m RPS,

– WRK2 can report precise NGINX statistics as WRK2 can cope with NGINX's 609k RPS.

In the real world, clients only handle a handful of connections (they don't try to act as fast as G-WAN). Therefore, they have no trouble measuring latencies, because they don't have to wait for their own code to finish pending tasks elsewhere.

In the real world, G-WAN would not run on the same machine as all the clients, so G-WAN would have all the CPU to itself and would most probably run much faster than in the above test.

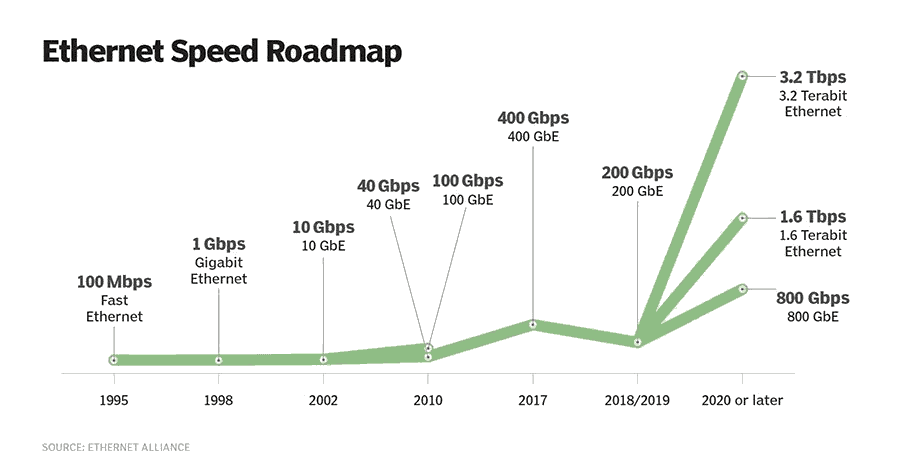

For normal users, 800 Gbps NICs are not yet available (but two 400 Gbps NICs could already be used):

1997 ... 1 Gbps Ethernet (2024 home LANs, not Clouds/data-centers)

2004 ... 10 Gbps Ethernet (the 20-year-old TechEmpower speed limit)

2010 ... 100 Gbps Ethernet (TechEmpower 'round #1' was born in 2013)

2024 ... 400 Gbps Ethernet (today's technology)

202? ... 800 Gbps Ethernet (in the works)

202? ... 1600 Gbps Ethernet (in the works)

In 2025, servers can embed several 400 Gbps PCIe Ethernet NICs to achieve Tbps transfer speeds – and G-WAN is the only HTTP server able to take advantage of today's hardware. The result will be geometric infrastructure and energy savings.
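The "several NICs" statement is simple ceiling arithmetic. As a sketch, assuming decimal Ethernet line rates (400 Gbps = 400 × 10^9 bit/s), the post's 1.05 Tbps figure taken at face value, and an optional efficiency factor for Ethernet/IP/TCP overhead (the 0.94 value below is an illustrative assumption, not a measurement), the minimum number of NICs is:

```python
import math


def nics_needed(target_gbps: float, nic_gbps: float = 400.0,
                efficiency: float = 1.0) -> int:
    """Minimum number of NICs whose usable capacity covers target_gbps.

    `efficiency` is an assumed fraction of the line rate left for payload
    after protocol overhead (1.0 means no overhead at all).
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return math.ceil(target_gbps / (nic_gbps * efficiency))


print(nics_needed(1050))                   # line rate only
print(nics_needed(1050, efficiency=0.94))  # with an assumed 6% overhead
print(2 * 400 >= 1050)                     # are two 400 Gbps NICs enough?
```

Under either assumption, three 400 Gbps NICs are the minimum for 1.05 Tbps, while two are not enough.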

Those are the pitfalls of high concurrency. In other words, WRK2 and NGINX show a severe scalability gap when facing a program, G-WAN, that does not suffer from these limitations.

In an ideal world, new client tools would be written to cope with G-WAN's scalability, so as not to mislead end-users with obviously false reports. But the required skills are scarce, and other G-WAN-based projects look more financially promising, so, unless someone funds these developments, they will not happen any time soon.

STEP 2 - the very tangible limits of nuttcp and tcpkali

Let's measure the local TCP limits to compare them to G-WAN's limits (knowing that G-WAN acts as an HTTP/1.1 server doing HTTP parsing, request validation, and then HTTP formatting of its replies to client requests – a much more demanding task than merely sending constant characters on the wire, regardless of the requests).

Despite being an HTTP server, G-WAN is faster than tcpkali (a TCP client and server that quickly shows scalability limits):

G-WAN: 172m RPS (134.72 GB/sec, that is, 1.05 Tbps)

--------------------------------------------------------------------------------

wrk2 -t10k -c10k -R1m "http://127.0.0.1:8080/404.html"

Initialised 10000 threads in 2203 ms.

Running 10s test @ http://127.0.0.1:8080/404.html

10000 threads and 10000 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 659.59ms 858.07ms 3.96s 0.01%

Req/Sec -nan -nan 0.00 0.00%

5534494 requests in 32.18ms, 4.33GB read

Non-2xx or 3xx responses: 5534494

Requests/sec: 172006899.55

Transfer/sec: 134.72GB

nuttcp 1 thread, ~55 Gbps:

---------------------------------------------------------

Terminal 1:

nuttcp -r

Terminal 2:

nuttcp 127.0.0.1

67894.2500 MB / 10.00 sec = 56954.1039 Mbps 99 %TX 76 %RX 0 retrans 0.13 msRTT
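The nuttcp line is only self-consistent under a mixed convention: the "MB" transferred must be 2^20 bytes while "Mbps" must be 10^6 bit/s. This small sketch (the helper name `nuttcp_mbps` is illustrative) recomputes the rate under that assumption, and also shows where the "~55 Gbps" heading comes from if, as elsewhere in this post, Mbps are divided by 1024.

```python
MIB = 1024 ** 2  # nuttcp's "MB" assumed to be 2**20 bytes


def nuttcp_mbps(mbytes: float, seconds: float) -> float:
    """Rate in Mbps (10**6 bit/s) for `mbytes` MiB sent in `seconds`."""
    return mbytes * MIB * 8 / seconds / 1e6


rate = nuttcp_mbps(67894.2500, 10.00)
print(f"recomputed: {rate:.0f} Mbps (nuttcp printed 56954.1039 Mbps)")
print(f"decimal Gbps: {rate / 1000:.1f}, post's /1024 convention: {rate / 1024:.1f}")
```

Reading "MB" as 10^6 bytes instead would give about 54,315 Mbps, far from the printed value, which is why the mixed convention is assumed here.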

tcpkali 1 thread ~76 Gbps:

---------------------------------------------------------

tcpkali 127.1:2222 -l2222 -T10s -mZ -c1 -w1

WARNING: Dumb terminal (not compiled with ncurses library), expect unglorified output.

Destination: [127.0.0.1]:2222

Listen on: [0.0.0.0]:2222

Listen on: [::]:2222

Ramped up to 1 connections.

Total data sent: 93677.3 MiB (98227724565 bytes)

Total data received: 93668.8 MiB (98218868736 bytes)

Bandwidth per channel: 78566.780⇅ Mbps (9820847.5 kBps)

Aggregate bandwidth: 78563.238↓, 78570.322↑ Mbps

Packet rate estimate: 7192679.3↓, 6743847.7↑ (12↓, 45↑ TCP MSS/op)

Test duration: 10.0015 s.

tcpkali 10 threads ~261 Gbps:

---------------------------------------------------------

tcpkali 127.1:2222 -l2222 -T10s -mZ -c10 -w10

WARNING: Dumb terminal (not compiled with ncurses library), expect unglorified output.

Destination: [127.0.0.1]:2222

Listen on: [0.0.0.0]:2222

Listen on: [::]:2222

Ramped up to 10 connections.

Total data sent: 319232.2 MiB (334739214255 bytes)

Total data received: 319189.0 MiB (334693891817 bytes)

Bandwidth per channel: 26750.863⇅ Mbps (3343857.9 kBps)

Aggregate bandwidth: 267490.519↓, 267526.741↑ Mbps

Packet rate estimate: 24493603.9↓, 22962354.7↑ (12↓, 45↑ TCP MSS/op)

Test duration: 10.0099 s.

tcpkali 24 threads ~193 Gbps:

---------------------------------------------------------

tcpkali 127.1:2222 -l2222 -T10s -mZ -c24 -w24

WARNING: Dumb terminal (not compiled with ncurses library), expect unglorified output.

Destination: [127.0.0.1]:2222

Listen on: [0.0.0.0]:2222

Listen on: [::]:2222

Ramped up to 24 connections.

Total data sent: 236401.0 MiB (247884433590 bytes)

Total data received: 236301.5 MiB (247780044452 bytes)

Bandwidth per channel: 8257.371⇅ Mbps (1032171.3 kBps)

Aggregate bandwidth: 198135.160↓, 198218.634↑ Mbps

Packet rate estimate: 18142142.9↓, 17013501.4↑ (12↓, 45↑ TCP MSS/op)

Test duration: 10.0099 s.

tcpkali 32 threads ~213 Gbps:

---------------------------------------------------------

tcpkali 127.1:2222 -l2222 -T10s -mZ -c32 -w32

WARNING: Dumb terminal (not compiled with ncurses library), expect unglorified output.

Destination: [127.0.0.1]:2222

Listen on: [0.0.0.0]:2222

Listen on: [::]:2222

Ramped up to 32 connections.

Total data sent: 260941.8 MiB (273617341155 bytes)

Total data received: 260852.4 MiB (273523599228 bytes)

Bandwidth per channel: 6832.608⇅ Mbps (854076.0 kBps)

Aggregate bandwidth: 218606.002↓, 218680.922↑ Mbps

Packet rate estimate: 20015768.7↓, 18769820.5↑ (12↓, 45↑ TCP MSS/op)

Test duration: 10.0097 s.
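The four tcpkali runs can be cross-checked from their own byte totals: in each run, the printed aggregate bandwidths agree with the bytes sent or received divided by the test duration to within about 0.1%. This sketch recomputes them and then expresses each run per worker thread (`-w`), using the post's /1024 convention for Gbps. The per-worker column is an illustrative addition, not tcpkali output.

```python
# workers: (bytes sent, bytes received, duration s, printed up Mbps, printed down Mbps)
RUNS = {
    1: (98_227_724_565, 98_218_868_736, 10.0015, 78_570.322, 78_563.238),
    10: (334_739_214_255, 334_693_891_817, 10.0099, 267_526.741, 267_490.519),
    24: (247_884_433_590, 247_780_044_452, 10.0099, 198_218.634, 198_135.160),
    32: (273_617_341_155, 273_523_599_228, 10.0097, 218_680.922, 218_606.002),
}


def mbps(nbytes: int, seconds: float) -> float:
    """Decimal megabits per second for `nbytes` transferred in `seconds`."""
    return nbytes * 8 / seconds / 1e6


for workers, (sent, recv, dur, up, down) in RUNS.items():
    # Largest relative gap between recomputed and printed bandwidth.
    gap = max(abs(mbps(sent, dur) - up) / up, abs(mbps(recv, dur) - down) / down)
    total_gbps = down / 1024  # the post's convention
    print(f"-w{workers:<3} {total_gbps:6.1f} Gbps total, "
          f"{total_gbps / workers:5.1f} Gbps per worker, gap {gap:.1e}")
```

The per-worker column makes the scaling limit visible: throughput peaks at 10 workers, and each additional worker adds less than the previous one.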

Results:

---------------------------------------------------------

G-WAN (1.05 Tbps) with 10,000 threads is 4.1 times faster than tcpkali (261 Gbps) with 10 threads (whose performance drops at higher concurrency).

These two comparative tests (using the HTTP and then the TCP protocols) demonstrate that G-WAN is a much (much) better server – to the point where G-WAN, as an HTTP server, is more than four times faster than mere TCP servers.

We have also shown that G-WAN is the only HTTP server able to take advantage of the recent 400 Gbps NICs.

But there's much more: 25.6 Tbps are already in use in some data-centers.

This lets Cloud vendors and data-centers make large, recurring energy and hardware savings while delivering higher-quality service to end-users.

If you are not taking advantage of these technologies, then your company is pointlessly losing money on extra (or severely under-used) hardware, and on lots of wasted energy.

A long time ago, some people already thought about the matter:

"Any sufficiently advanced technology is indistinguishable from magic." (Arthur C. Clarke)