Theory vs. Practice

Diagnosis is not the end, but the beginning of practice.

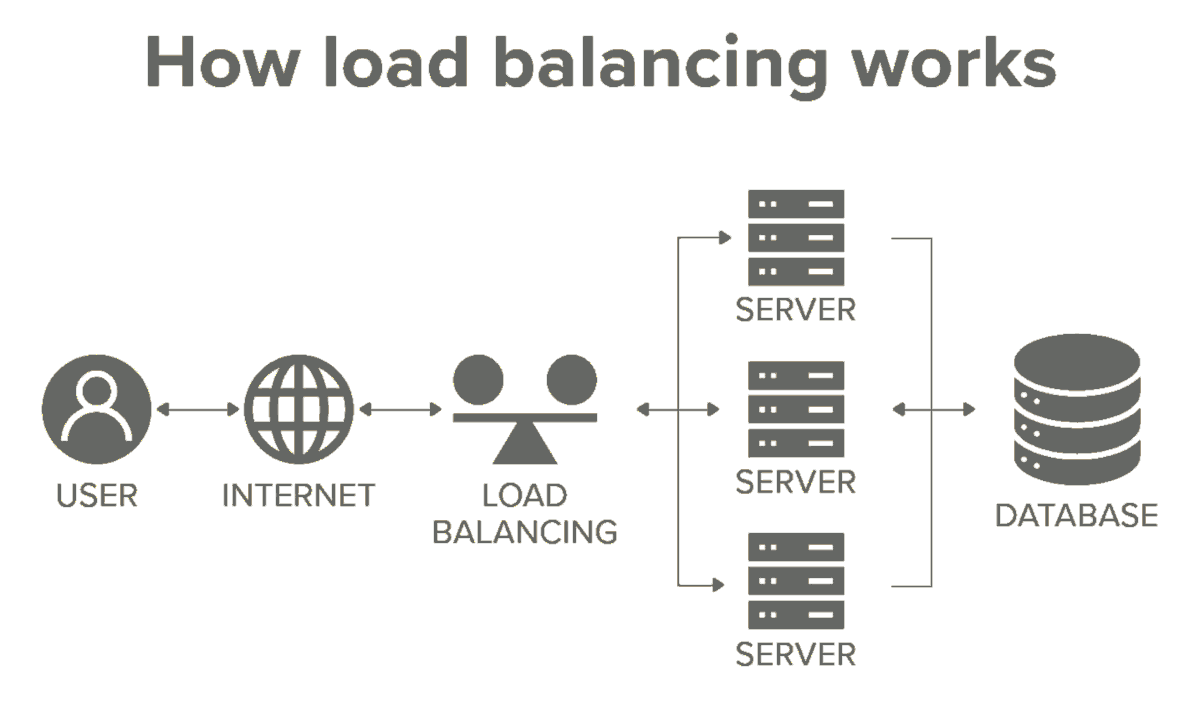

HOW-TO: Load-Balancing – Best Ways Explained, DNS Configuration Example

Many among us believe that they know the answers... but some might learn something and save a lot of money. First, let's review what problems load-balancing is expected to resolve, and what features are added as a bonus:

- scalability, distributing the load to backend servers,

- fail-safety, continue despite backend maintenance,

- better performance, lower latency (cache, pick fit backend),

- better security, hiding and protecting backend servers,

- health checks of backend servers (pings, response time),

- offloading: TLS termination, caching and compression.

There are certainly vendors offering more extra-bonus features, but let's focus on the main purpose of a load-balancer reverse-proxy.

1. scalability: serving many clients, most of them idle (pausing to read contents).

A load-balancer distributes the load to several backend servers, right. But why not do it with DNS?

To survive real-life (D)DoS attacks escaping APACHE, NGINX, LITESPEED, and HAPROXY WAFs, a load-balancer reverse-proxy (like all frontend servers) must use small timeouts (or, even better, adaptive ones):

97% idle clients (real users visiting pages, pausing to read them and/or fill forms) means that a server has 3% active clients, which, on 1 million clients, gives 30k active clients.
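The arithmetic behind that estimate can be checked with a minimal sketch. The function name and its parameters are illustrative assumptions, not part of any server API:

```python
def active_clients(total_clients: int, idle_percent: int) -> int:
    """Return the number of active clients, given the share of idle ones.

    Integer arithmetic is used so that the result is exact.
    """
    return total_clients * (100 - idle_percent) // 100


if __name__ == "__main__":
    # 1 million connected clients, 97% of them idle (figures from the text)
    print(active_clients(1_000_000, 97))
```

With 1 million clients and 97% of them idle, the function returns 30,000 active clients.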

So the tale of maintaining millions of active connections is a fallacy – whether you are a load-balancer or a backend server (by default, Linux caps a process at 1,048,576 open file descriptors, TCP connections included, limiting the joy anyway).

And, given the ridiculously low performance of the (self-proclaimed) "fastest load-balancer in the world" (HAPROXY), the expected gains when "accelerating" NGINX are very limited (unless caching takes place and NGINX is trapped in long and slow FCGI streamed content-generation). So, HAPROXY can only slow down G-WAN backends by several orders of magnitude (not an improvement).

DNS is a much more efficient (and free) way to resolve that problem (upfront, even before client connections reach the website).

2. fail-safety: users expect websites to always be available.

Sure, but this is also true of the load-balancer. To avoid a single point of failure, you will need redundancy and therefore several load-balancer reverse-proxies.

To avoid congestion, you will need many load-balancers (especially if the load-balancers like HAPROXY are hundreds of times slower than a single G-WAN backend server).

Here also, DNS is a much more efficient (and cheaper) alternative to a load-balancer application.

3. performance: users expect websites to respond quickly.

A load-balancer reverse-proxy not doing caching will have to be much faster than the backend servers it claims to "accelerate", but HAPROXY is not much faster than NGINX – and immensely slower than G-WAN.

So the only way for a HAPROXY frontend to accelerate NGINX is to have many NGINX backends generating a lot of slow (uncached) contents (G-WAN would generate, cache and serve contents massively faster than HAPROXY and NGINX combined, whatever the number of backends).

Therefore, an efficient caching reverse-proxy or caching App. Server (G-WAN doing both) delivers much better performance than a load-balancer (which then is pointless to boost performance).

And, here again, DNS is a much more efficient (and cheaper) alternative to a load-balancer application, especially if your backend servers are able to do caching properly.

4, 5, 6. security, health checks, offloading (TLS, caching, compression).

All of these extra features are likely to be redundant with any capable backend server.

So, using a load-balancer makes sense if (and only if) it does the job better than the backend servers. At the time we wrote this page, HAPROXY had a CVE (Common Vulnerabilities and Exposures) count of 57 (click the link to know the current count). What's the point of adding yet another unsafe component to the mind-boggling 291 CVEs of NGINX?

Health checks are done by NGINX and G-WAN by relaunching a locked or crashing child process, so load-balancer reverse-proxies are redundant (free DNS-based load-balancing can take backend latency into account to pick fit backends).

G-WAN (2009) passed 17 years without a single CVE, and it will be even safer now it uses SLIMalloc to be "memory-safe" (an exclusivity, like G-WAN's clean CVE record or G-WAN's performance).

Offloading a given task to the load-balancer is only desirable if the load-balancer is actually much faster than the backend servers. We have seen that HAPROXY is only marginally faster than NGINX (and massively slower than G-WAN), so there's no benefit in adding another layer of inefficiencies and vulnerabilities if you can avoid it.

Since DNS cannot be excluded from the delivery chain, it is a much safer (and cheaper) alternative to a load-balancer application.

For profit, some have a passion to tell the opposite of what reality is – that's how, eventually, most die helplessly trying to resolve ever-growing problems that should have never existed in the first place (gratuitous inefficiency, complexity, incompatibility, vulnerability, etc.).

As these extra costs have become a deadly threat to economic life for the vast majority, it is more than time to focus on structural solutions. Individually and collectively, we can (and therefore should) do much better than today's sorry state of things.

DNS will not limit how many backend servers you will use (nor how to pick one: server response times, bandwidth, least connections)... but it allows you to deploy them in different datacenters (introducing network fail-safety), and even different countries by geo-locating clients (providing the lowest possible latency, worldwide).

And guess what, that's exactly what Cloudflare does:

"Cloudflare Load Balancing is a DNS-based load balancing solution that actively monitors server health via HTTP/HTTPS requests. Based on the results of these health checks, Cloudflare steers traffic toward healthy origin servers and away from unhealthy servers. Cloudflare Load Balancing also offers customers who reverse proxy their traffic the additional security benefit of masking their origin server's IP address."

So, you might do (several orders of magnitude) better with a decent caching reverse-proxy or caching backend server – without a load-balancer reverse-proxy.

Most of the time, with DNS-based load-balancing, fast, scalable, backend servers (or caching reverse-proxies) are all you need. You will spend much less money (in CAPEX and OPEX), and waste much less time in expensive and boring tasks (configuration, monitoring, maintenance, data-breach and incident recovery).

Configuring DNS Servers for Load-Balancing

1. Round Robin DNS

Rotates through a list of IP addresses for domain resolution:

example.com. IN A 192.0.2.1

example.com. IN A 192.0.2.2

example.com. IN A 192.0.2.3
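To illustrate the rotation, here is a minimal simulation of a name server that shifts the record order on each response. The class `RoundRobinZone` is a hypothetical name made up for this sketch (real DNS servers implement rotation internally), and it assumes that clients connect to the first address they receive:

```python
from collections import deque


class RoundRobinZone:
    """Simulate a DNS server rotating the A records of one name."""

    def __init__(self, addresses):
        self._addresses = deque(addresses)

    def answer(self):
        """Return the current record order, then rotate it by one position."""
        response = list(self._addresses)
        self._addresses.rotate(-1)  # the first address moves to the end
        return response


if __name__ == "__main__":
    zone = RoundRobinZone(["192.0.2.1", "192.0.2.2", "192.0.2.3"])
    for _ in range(4):
        # Assumption: each client uses the first address of the response
        print(zone.answer()[0])
```

Successive clients are spread over the three addresses in turn, and the fourth client is back on the first address.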

2. Weighted Round Robin

Assigns weights to different servers based on their capacity:

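example.com. IN A 192.0.2.1 ; server1

example.com. IN A 192.0.2.2 ; server2

example.com. IN A 192.0.2.3 ; server2, second address: 2x weight

(A name can hold only one CNAME record, and none at the zone apex, so the weight is expressed here by publishing two addresses for the higher-capacity server.)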
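The effect of such weights can be sketched as follows. This is a simplified illustration rather than the algorithm of any particular DNS server: `weighted_cycle` is a hypothetical helper that repeats each server name as many times as its weight, then cycles through the result:

```python
import itertools
from collections import Counter


def weighted_cycle(weights):
    """Yield server names forever, each one in proportion to its weight.

    `weights` maps a server name to a positive integer weight.
    """
    expanded = [name for name, weight in weights.items() for _ in range(weight)]
    return itertools.cycle(expanded)


if __name__ == "__main__":
    # Assumption: server2 has twice the capacity of server1
    picker = weighted_cycle({"server1": 1, "server2": 2})
    picks = [next(picker) for _ in range(6)]
    print(Counter(picks))
```

Over six picks, server2 is selected four times and server1 twice, matching the 2x weight.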

3. GeoDNS/GeoIP Routing

Routes users to the nearest server based on their geographic location:

am.example.com. IN A 198.5.100.2 ; Americas

eu.example.com. IN A 203.2.113.2 ; Europe

as.example.com. IN A 213.2.113.2 ; Asia
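The selection step of a GeoDNS server can be sketched as a lookup from the client's address to a regional record. The prefix table below is purely hypothetical (documentation address ranges standing in for a real GeoIP database), as are the function name and the fallback record:

```python
import ipaddress

# Hypothetical client prefixes standing in for a GeoIP database
GEO_TABLE = [
    (ipaddress.ip_network("192.0.2.0/24"), "am.example.com."),     # Americas
    (ipaddress.ip_network("198.51.100.0/24"), "eu.example.com."),  # Europe
    (ipaddress.ip_network("203.0.113.0/24"), "as.example.com."),   # Asia
]

# Assumption: clients that cannot be located are sent to Europe
DEFAULT_RECORD = "eu.example.com."


def pick_record(client_ip: str) -> str:
    """Return the regional record matching the client's address."""
    address = ipaddress.ip_address(client_ip)
    for network, record in GEO_TABLE:
        if address in network:
            return record
    return DEFAULT_RECORD


if __name__ == "__main__":
    print(pick_record("203.0.113.9"))
```

A production setup would query a maintained GeoIP database instead of a static table, but the decision logic stays the same: locate the client, then answer with the nearest region's record.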

4. Health Checking and Failover

Implement health checks to automatically remove unhealthy servers from rotation:

- Check DNS and backend servers status and health,

- Update DNS records dynamically based on health status,

- Set proper TTL values for quick failover.
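The steps above can be sketched as a filter applied before records are published. The names `tcp_alive` and `healthy_records` are assumptions made for this example, and the TCP connection test is only one possible probe (an HTTP request or a response-time threshold would work the same way):

```python
import socket


def tcp_alive(address: str, port: int = 80, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to the backend succeeds in time."""
    try:
        with socket.create_connection((address, port), timeout=timeout):
            return True
    except OSError:
        return False


def healthy_records(addresses, check=tcp_alive):
    """Keep only the addresses that pass the health check.

    Design assumption: if every backend fails the check, all addresses are
    kept ("fail open") rather than publishing an empty record set.
    """
    alive = [address for address in addresses if check(address)]
    return alive or list(addresses)


if __name__ == "__main__":
    backends = ["192.0.2.1", "192.0.2.2", "192.0.2.3"]
    # A stub check is used here so the example runs without real backends
    print(healthy_records(backends, check=lambda a: a != "192.0.2.2"))
```

The resulting list is what a dynamic DNS update would publish until the next check.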

5. Best Practices

- Set proper TTL values (balance between failover speed and DNS load),

- Implement monitoring and alerting, testing failover procedures regularly,

- Check if a combination of DNS and reverse-proxy load-balancing performs better in your case.
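The TTL trade-off can be estimated with a rough worst-case model: a failure must first be detected, then resolvers that cached the stale record keep it until the TTL expires. The function and parameter names are illustrative assumptions, and the model presumes that resolvers honor the TTL:

```python
def worst_case_failover_seconds(ttl: int, check_interval: int,
                                failures_before_removal: int) -> int:
    """Estimate the longest time a client may be sent to a dead backend.

    Detection takes up to `check_interval * failures_before_removal` seconds,
    then a record cached just before its removal lives for `ttl` more seconds.
    """
    detection = check_interval * failures_before_removal
    return detection + ttl


if __name__ == "__main__":
    # Hypothetical settings: 60 s TTL, checks every 10 s, 3 failures required
    print(worst_case_failover_seconds(60, 10, 3))
```

With these hypothetical settings, the estimate is 90 seconds. Lowering the TTL shortens that window, at the price of more DNS queries.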

DNS-based Load-Balancing, Pros and Cons

DNS "GSLB" (Global Server Load Balancing) is more advanced, complex and expensive than the mechanical DNS "Round-Robin" list traversal: it removes unavailable backends from the list (and adds them back when they recover), and may use server load sensors to weight them in the list. In the table below, "partitioning" means per backend IP address: a client is always processed by the same backend.

| | DNS round-robin | DNS GSLB direct-to-node | DNS GSLB + Layer 7 Reverse Proxy |
|---|---|---|---|
| Use case | Fast, easy, no extra costs | Huge traffic, several datacenters | Medium to large traffic, several datacenters |
| Method | Several DNS A records | DNS GSLBs + health checks + load-sensors | DNS GSLB + several reverse proxies |
| Vector | DNS responses | DNS responses | DNS responses & TCP/UDP traffic |
| Where? | DNS | DNS GSLB | DNS GSLB + Reverse Proxy |
| Scheduling | Round robin | Weighted: round robin | Weighted: least connections or partitioning |
| Granularity | Poor | Good | Excellent |
| Complexity | Low | Medium | High |
| TLS/WAF offloading | No | No | Yes |
| Traffic management | No | Good | Excellent |
| Dynamic weighting | No | Yes | Yes |
| Session persistence | No | Topology-based | Advanced |
| Health checks | No | Good | Excellent |
| Scalability | Poor | Excellent | Good |
| Cost | Low | Medium | High |

Note that the above metrics are for a standard (passive) DNS server. If G-WAN provided an active DNS protocol handler, then DNS-based load-balancing would beat the more expensive alternatives on all the criteria.

But, given G-WAN's performance with and without caching, a G-WAN load-balancer would certainly be a game-changer: THEN the load-balancer would really accelerate backend servers (like today's G-WAN caching Reverse-Proxy). In the meantime, you have DNS: if it works for Cloudflare, it will work for you.

Yet new reasons to hire or sponsor G-WAN to implement new features!